In February, at an event at the 92nd Street Y’s Unterberg Poetry Center in New York, while sharing the stage with my fellow British writer Martin Amis and discussing the impact of screen-based reading and bidirectional digital media on the Republic of Letters, I threw this query out to an audience that I estimate was about three hundred strong: “Have any of you been reading anything by Norman Mailer in the past year?” After a while, one hand went up, then another tentatively semi-elevated. Frankly I was surprised it was that many. Of course, there are good reasons why Mailer in particular should suffer posthumous obscurity with such alacrity: his brand of male essentialist braggadocio is arguably extraneous in the age of Trump, Weinstein, and fourth-wave feminism. Moreover, Mailer’s brilliance, such as it was, seemed, even at the time he wrote, to be sparks struck by a steely intellect against the tortuous rocks of a particular age, even though he labored tirelessly to the very end, principally as the booster of his own reputation.

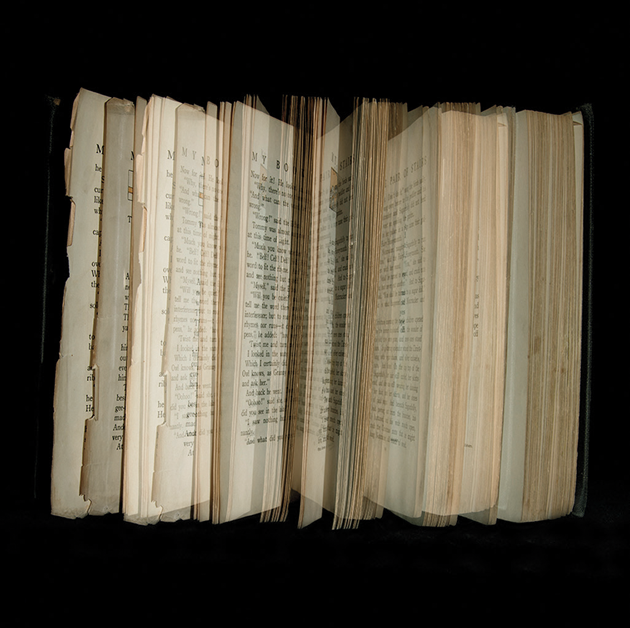

Photograph by Ellen Cantor from her Prior Pleasures series © The artist. Courtesy dnj Gallery, Santa Monica, California

It’s also true that, as J. G. Ballard sagely remarked, for a writer, death is always a career move, and for most of us the move is a demotion, as we’re simultaneously lowered into the grave and our works into the dustbin. But having noted all of the above, it remains the case that Mailer’s death coincided with another far greater extinction: that of the literary milieu in which he’d come to prominence and been sustained for decades. It’s a milieu that I hesitate to identify entirely with what’s understood by the ringing phrase “the Republic of Letters,” even though the overlap between the two was once great indeed; and I cannot be alone in wondering what will remain of the latter once the former, which not long ago seemed so very solid, has melted into air.

What I do feel isolated in—if not entirely alone in—is my determination, as a novelist, essayist, and journalist, not to rage against the dying of literature’s light, although it’s surprising how little of this there is, but merely to examine the great technological discontinuity of our era, as we pivot from the wave to the particle, the fractal to the fungible, and the mechanical to the computable. I first began consciously responding, as a literary practitioner, to the manifold impacts of bidirectional digital media (BDDM) in the early 2000s—although, being the age I am, I have been feeling its effects throughout my working life—and I first started to write and speak publicly about it around a decade ago. Initially I had the impression I was being heard out, if reluctantly, but as the years have passed, my attempts to limn the shape of this epochal transformation have been met increasingly with outrage, and even abuse, in particular from my fellow writers.

As for my attempts to express the impact of the screen on the page, on the actual pages of literary novels, I now understand that these were altogether irrelevant to the requirement of the age that everything be easier, faster, and slicker in order to compel the attention of screen viewers. It strikes me that we’re now suffering collectively from a “tyranny of the virtual,” since we find ourselves unable to look away from the screens that mediate not just print but, increasingly, reality itself.

I think there are several explanations for the anger directed against anyone who harps on the message of the new media, but in England it feels like a strange inversion of what sociologists term “professional closure.” Instead of making the entry to literary production and consumption more difficult, embattled writers and readers, threatened by the new means of literary production, are committing strange acts of professional foreclosure: holding fire sales of whatever remains of their unique and nontransferable skills—as vessels of taste (and, therefore, the canon) and, most notably, as transmitters and receivers of truths that in many instances had endured for centuries. As I survey the great Götterdämmerung of the Gutenberg half-millennium, what comes to mind is the hopeful finale to Ray Bradbury’s Fahrenheit 451. When Guy Montag, the onetime “fireman” or burner of books by appointment to a dystopian totalitarian state, falls in among the dissident hobo underclass, he finds them to be the saviors of those books, each person having memorized some or all of a lost and incalculably precious volume—one person Gulliver’s Travels; another the Book of Ecclesiastes.

In March, I gave an interview to the Guardian in which I repeated my usual—and unwelcome—assertion that the literary novel had quit center stage of our culture and was in the process, via university creative writing programs, of becoming a conservatory form, like the easel painting or the symphony. Furthermore, I fingered BDDM as responsible for this transformation, the literary novel being simply the canary forced to flee this particular mine shaft first. The response was a Twitter storm of predictable vehemence and more than seven hundred comments on the Guardian’s website, which, reading over preparatory to writing this article, I found to display all the characteristics of the modern online debate: willful misunderstanding and misinterpretation of my original statements, together with criticism that is either ad hominem or else transparently animated by a superimposed ideological cleavage.

Among the relentless punning on my name—selfish, self-obsessed, self-regarding, etc., etc.—there are a few cogent remarks. Some critics comment that the literary novel has always been a marginal cultural form; others, that its torchbearers are now from outside the mainstream Western tradition, either by reason of ethnicity, heritage, sex, or sexual orientation. Both these and others aver that the novel form is alive and kicking, that paperback sales have actually risen in the past few years, and that readers are rediscovering the great virtues of the printed word as a media technology.

All of these viewpoints I’m more than willing to entertain. What I’m less prepared to consider are arguments that begin by quoting sales figures that show the sales of printed books increasing and those of e-books in decline. The printed books being sold are not the sort of difficult reading that spearheads knowledge transfer but picture books, kidult novels like the Harry Potter series, and, in the case of my own UK publisher at least, a great tranche of spin-off books by so-called vloggers (a development Marshall McLuhan anticipated when he noted that new media always cannibalize the forms of the past). As for the decline in e-books, this is because the screen is indeed not a good vehicle for the delivery of long-form prose; and anyway the printed literary novel (or otherwise challenging text) is competing not with its digital counterparts but with computer-generated games, videos, social media, and all the other entertainments the screen affords. Moreover, I’d wager that the upsurge in printed-book sales, such as it is, is due to greater exports and the continuation of, in the West at least, a strange demographic reversal, since for the first time in history we have societies where the old significantly outnumber the young.

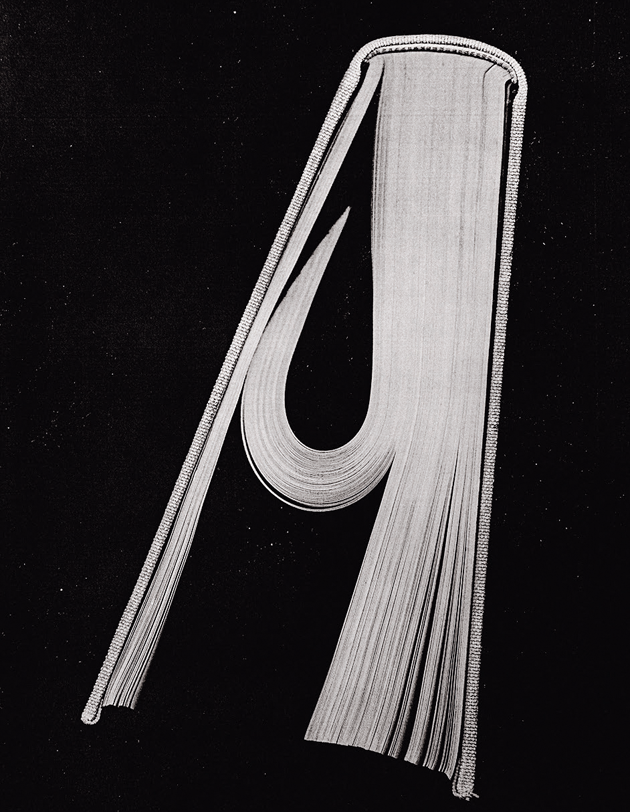

“Hook Book,” by Mary Ellen Bartley © The artist. Courtesy Yancey Richardson Gallery, New York City

England is a little-big country, simultaneously cosmopolitan and achingly parochial, which means there tends to be only one person assigned to each role. For years I was referred to as “the enfant terrible” of English letters—a title once bestowed on Martin Amis. But while with advancing years this ascription has obviously become ridiculous, I haven’t been bootstrapped—like him—to the position of elder literary statesman, but booted sideways. As a commentator on the Daily Telegraph’s website puts it:

When I see Will Self’s name trending on social media, I feel . . . that the old boy has expressed some opinion that I will broadly agree with, but because he has become such a toxic figure his pronouncements manage to alienate his natural allies and strengthen the cause of his enemies.

Be all this as it may, in December of last year an authoritative report was delivered on behalf of Arts Council England. Undertaken by the digital publisher Canelo, it compiled and analyzed sales data from Nielsen BookScan demonstrating that between 2007 and 2011 hardcover fiction sales in England slumped by 10 million pounds (approximately $13 million), while paperback fiction suffered still more, with year-on-year declines almost consistently since 2008. In one year alone, 2011–12, paperback fiction sales fell by 26 percent. Sarah Crown, the current head of literature for the ACE, commenting on the report to the Guardian, said,

It would have been obviously unnecessary in the early 1990s for the Arts Council to consider making an intervention in the literary sector, but a lot has changed since then—the internet, Amazon, the demise of the Net Book Agreement—ongoing changes which have had a massive effect.

And so 2018 will see a ramping-up of the British government’s effective support of literary fiction—in some ways a return to the period before the 1990s, when the Net Book Agreement (which disallowed publishers from discounting) helped to support independent publishers, booksellers, and indeed authors.

In the United States there’s been a far more consistent and public critique of BDDM’s impact on literature, on education, on culture, and on cognition generally. Nicholas Carr’s two books, The Shallows, on the internet, and The Glass Cage, on automation (and, by extension, artificial intelligence), are examples of this genre: painstaking and lucid, adducing evidence widely and braiding it into narratives that possess by themselves the literary qualities they seek to wrest from the oblivion of digitization. There is, of course, a great deal of empirical research concerning the impact of screen media on the mind/brain, but for this essay I concentrated on a couple of academic papers coauthored by a Norwegian education researcher, Professor Anne Mangen of the Norwegian Reading Centre, based at the University of Stavanger. One paper is titled “Lost in an iPad: Narrative Engagement on Paper and Tablet”; the other, “Reading Linear Texts on Paper Versus Computer Screen: Effects on Reading Comprehension.” The papers covered two studies, one conducted with Canadian university students in 2014, the other in 2013 with Norwegian high school students, in which screen reading and paper reading were compared. Mangen’s work is cited by Carr in The Shallows, as are an impressive number of other papers summarizing studies attempting to tease out the impacts on the human subject of screen—as opposed to page—interactions.

The references for Mangen’s two papers give an impression of a rapidly burgeoning field, with studies being conducted all over the world, leading to papers with titles such as “Effect of Light Source, Ambient Illumination, Character Size and Interline Spacing on Visual Performance and Visual Fatigue with Electronic Paper Displays” and “The Emerging, Evolving Reading Brain in a Digital Culture: Implications for New Readers, Children with Reading Difficulties, and Children Without Schools.” In The Shallows, Carr refers to a study by one scholar with findings to the effect that the digitization of academic papers, far from coinciding with a greater breadth of reference, has led to a sort of clumping effect, whereby unto the papers most cited will be given yet more citations. We might think of this as the Google-ization of scholarship and academia generally, since it mirrors the way the search engine’s algorithms favor ubiquity over intrinsic significance.

It’s probably the case that interested parties lurk behind many of these studies, at least those that show the impact of screen as against page to be effectively negligible, or compensated for by improvements in other areas of human cognition. I certainly don’t think Professor Mangen’s work is at all compromised in this fashion; however, reading through the elaborate methodologies she and her collaborators devised for these comparisons—ones that attempt the creation of laboratory conditions and the imposition of a statistical analysis on data that is hopelessly fuzzy—I became more and more perplexed. Who, I thought, besides a multidisciplinary team in search of research funding, could possibly imagine that a digital account of the impact of reading digital print on human cognition would be effective? For such an account rests on the supremacy of the very thing it seeks to counteract, which can be summarized as a view of the human mind/brain that is itself computational in form. Whereas for literature to be adequately defended, its champions must care, quixotically, not at all for the verdict of history, while uttering necessary and highly inconvenient truths—at least for the so-called FANG corporations: Facebook, Amazon, Netflix, and of course Google, whose own stated ambition to digitize all the world’s printed books constitutes a de facto annihilation of the entire Gutenberg era.

Mangen’s statistical analysis of questionnaire responses filled in by cohorts of tablet or e-book readers does establish that under certain controlled circumstances reading on paper affords important advantages—allowing for greater retention of information and comprehension of narrative than digital reading. We can quibble about swiping versus scrolling, the lengths of the texts employed in the studies, the formulation of questionnaire items, and the significance—or insignificance—of the respondents’ perception of these texts as factual or fictional. We can also point out that laboratory conditions simply cannot replicate the way our use of BDDM is now so completely embedded in our quotidian lives that to attempt to isolate a single mode—such as digital reading—and evaluate its cognitive effects is quite impossible.

Mangen’s papers suggest that as our capacity for narrative engagement is compromised by new technology, we experience less “transportation” (the term for being “lost” in a piece of writing), and as a further consequence become less capable of experiencing empathy. Why? Because evidence from real-time brain scans tells us that when we become deeply engaged with reading about Anna Karenina’s adultery, at a neural level it looks pretty much as if we were committing that adultery ourselves, such is the congruence in the areas of the brain activated. Of course, book lovers have claimed since long before the invention of brain scanning that identifying with characters in stories makes us better able to appreciate the feelings of real live humans, though this certainly seems to be a case of correlation being substituted for causation. People of sensibility have always been readers, which is by no means to say reading made them so—and who’s to say my empathy centers don’t light up when Anna throws herself to her death because I take a malevolent satisfaction in her ruin?

Carr’s The Shallows reaches a baleful crescendo with this denunciation: the automation of our own mental processes will result in “a slow erosion of our humanness and our humanity” as our intelligence “flattens into artificial intelligence.” Which seems to me a warning that the so-called singularity envisaged by techno-utopians such as Ray Kurzweil, a director of engineering at Google, may come about involuntarily, simply as a function of our using our smartphones too much. Because the neuroscientific evidence is now compelling and confirmed by hundreds, if not thousands, of studies: our ever-increasing engagement with BDDM is substantially reconfiguring our mind/brains, while the new environments it creates—referred to now as code/space, since these are locations that are themselves mediated by software—may well be the crucible for epigenetic changes that are heritable. Behold! The age of Homo virtualis is quite likely upon us, and while this may be offensive to those of us who believe humans to have been made in God’s image, it’s been received as a cause for rejoicing for those who believe God to be some sort of cosmic computer.

But what might it be like to limit or otherwise modulate our interaction with BDDM so as to retain some of the cognitive virtues of paper-based knowledge technology? Can we envisage computer-human interactions that are more multisensory so as to preserve our awareness and our memory? For whatever publishers may say now about the survival of print (and with it their expense accounts), the truth is that the economies of scale, production, and distribution render the spread of digital text ineluctable, even if long-form narrative prose were to continue as one of humanity’s dominant modes of knowledge transfer. But of course it won’t. It was one of Marshall McLuhan’s most celebrated insights that the mode of knowledge transfer is more significant than the knowledge itself: “The medium is the message.”

For decades now, theorists from a variety of disciplines have been struggling to ascertain the message of BDDM considered as a medium, a task hampered by its multifarious character: Is it a television, a radio, an electronic newspaper, a videophone, or none of the above? McLuhan distinguished confusingly between “hot” and “cool” media—ones that complement human perception and ones that provoke human sensual imagination—but BDDM holds out the prospect of content that’s terminally lukewarm: a refashioning of perception that will render imagination effectively obsolete. In place of the collective, intergenerational experience of reading Moby Dick, centuries-long virtualities will be compressed to the bandwidth of real time and fed to us via headsets and all-body feely suits, “us” being the operative word. With a small volume in your pocket, you may well wander lonely as a cloud, stop, get it out, and read about another notionally autonomous wanderer. But the Prelude Experience, a Wordsworthian virtual-reality program I invented just this second, requires your own wearable tech, the armies of coders—human or machine—necessary to write it, and the legions of computers, interconnected by a convolvulus of fiber-optic cabling, needed to make it seem to happen.

What the critics of BDDM fear most is the loss of their own autonomous Gutenberg minds, minds that, like the books they’re in symbiosis with, can be shut up and put away in a pocket, minds the operations of which can be hidden from Orwellian surveillance. But the message of BDDM is that the autonomous human mind was itself a contingent feature of a particular technological era. Carr—representative in this, as in so many other areas—wants there to be wriggle room, yet his critique of BDDM rests firmly on technological determinism: humans don’t devise technologies to solve particular problems, nor are those technologies “value neutral” in terms of their impact but rather “monkey see, monkey do.” Technologies arise spontaneously and spread mimetically, in the process acting reciprocally on the minds they’re born of.

So what does this wriggling look like? Well, it looks like programs devised to stop computer users from going online; it looks like stressed-out parents, worried by poor academic performance, trying to police their children’s online behavior. It looks like the “slow” movements devised to render everything from transportation to cuisine more mindful. It looks, in short, like a cartoon I remember from Mad magazine back in the early 1970s in which hairy proto-eco warriors are mounting a demonstration against the infernal combustion engine: “Okay. . . . Let’s get the motorcade rolling!” shouts one of the organizers—and they all clamber into their gas-guzzlers and roar away in a cloud of lead particulate and carbon monoxide. Why? Because the very means we have of retreating from BDDM are themselves entirely choked by its electro-lianas. Sure, some wealthy parents will be able to create wriggle room, within which their conservatory-educated offspring can fashion old-style literary novels, but this will have all the cultural significance of hipsters with handlebar mustaches brewing old ales. The philosopher John Gray was, I suspect, being more perspicacious when he said that in the future—the very near future, it transpires—privacy itself will become a luxury, and by privacy he means not simply the ability to avoid being surveilled—in the real world as well as the virtual one—but the wherewithal to maintain what George Eliot termed “an equivalent center of self.” The self we perceive to be in a symbiotic relation with the printed word.

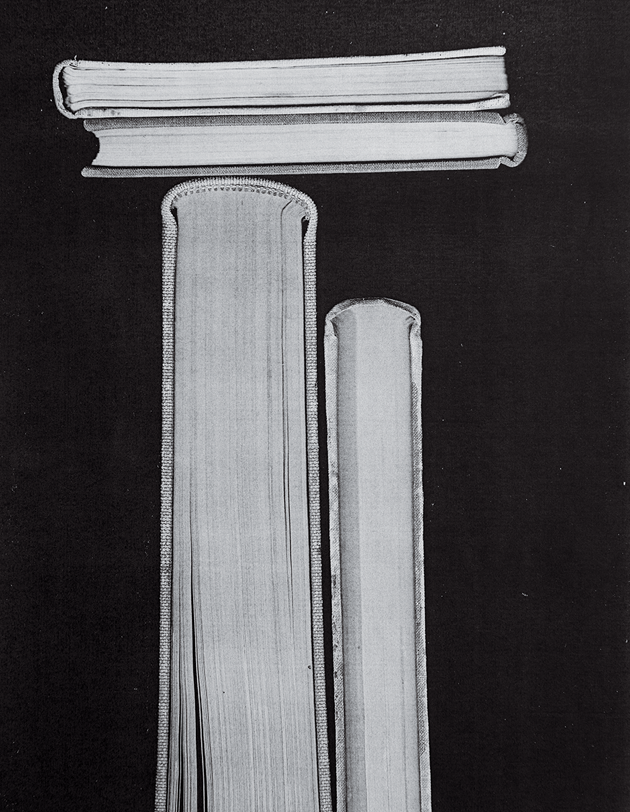

“Book Balance,” by Mary Ellen Bartley © The artist. Courtesy Yancey Richardson Gallery, New York City

Which returns me, suitably enough, to the much-punned-upon self that’s my own. In conversation and debate with those who view the inception of BDDM as effectively value neutral—and certainly not implicated in the wholesale extinction of literary culture—I run up time and again against that most irrefutable body of evidence: the empirical sample of one. Marx may have said that history is made by the great mass of individuals, but these individuals pride themselves on their ahistorical position: they (and/or their children) read a lot, and they still love books, and they prefer to read on paper—although they may love reading on their Kindle as well. The point is, they tell me angrily at whatever literary reading or lecture it is that I’m delivering, that rumors of the serious novel’s death are, as ever, greatly exaggerated.

Well, I have considerable sympathy for their position—I, too, constitute an empirical sample of one. However, the study I’ve embarked on these past thirty-odd years, using my sole test subject, has led me to rather different conclusions. If I didn’t find screen-based writing difficult to begin with, or a threat to my sense of the fictive art, it’s simply because early computers weren’t networked until the mid-1990s; and until the full rollout of wireless broadband, around a decade later, the connection was made only with electro-banging and hissing difficulty. I didn’t return to writing on a manual typewriter in 2004, because apart from a little juvenilia I’d never written anything long on one before. And I didn’t even consciously follow this course; it just seemed instinctively right. If there are writers out there who have the determination—and concentration—to write on a networked computer without being distracted by the worlds that lie a mere keystroke away, then they’re far steelier and more focused than I. And if, further, they’re able to be transported sufficiently by their own word stream to avoid the temptation to research online in medias res, then, once again, I’m impressed.

For me, what researchers into the impact of screen reading term “haptic dissonance” (the disconnect between the text and the medium it is presented in) grew worse and worse throughout the 2000s. As did the problem of thinking of something I wished to write about—whether it be an object or something intangible—and then experiencing a compulsion to check its appearance or other aspects online. I began to lose faith in the power of my own imagination, and realized, further, that to look at objects on a screen and then describe them was, in a very important sense, to abandon literature, if by this is understood an art form whose substrate is words alone. For to look at an image and then describe it isn’t thinking in words but mere literalism. As for social media, I was protected from it initially by my own notoriety: far from wanting more contact with the great mass of individuals, having been a cynosure of sorts since my early thirties, I desired less. At an intuitive level I sensed that the instantaneous feedback loops between the many and the few that social media afforded were inimical to the art of fiction, which to a large extent consists in the creation of one-to-one epiphanies: “Oh,” we exclaim as readers, “I’ve always felt that way but never seen it expressed before.” And then we cleave to this new intimacy, one shorn of all the contingencies of sex, race, class, and nationality. By contrast with the anonymous and tacit intimacy to be found between hard covers, social media is all about stridently identified selves—and not simply to one another but to all. In the global village of social media it’s precisely those contingent factors of our identities—our sex, our race, our class, our nationality—that loom largest; no wonder it’s been the medium that has both formed and been formed by the new politics of identity.

At the level of my person, and my identity, though, I’ve been striving for the past fourteen years to make the entire business of literary composition more apprehensible: the manual typewriter keeps me bound to sheets of paper that need to be ordinally arranged, for no matter how flimsy, they’re still objects you can hold and touch and feel. Nowadays, I go still further: writing everything longhand first, then typing it up on the manual, and only then keying it into a computer file—a process that constitutes another draft. If readers need to know where they are in a text, and to use this information to aid their grasp on the narrative and their identification with the characters, then how much more important is this for a writer? Not much more. Because readers and writers are so tightly dependent on each other, it’s specious to make the distinction—indeed, I’d assert that there’s a resonance between the act of writing and the act of reading such that the understanding of all is implicated in that of each. Yet to begin with I noticed nothing but benefits from my own screen-based reading—thousands of texts available instantaneously; the switching among them well-nigh effortless; and the availability of instant definitions, elucidations, and exegeses. Researchers hypothesize, and here I quote from Mangen’s “Lost in an iPad,” that “reading a novel on a tablet or e-reader doesn’t feel like what reading a novel should feel like.” Far from being bothered by this, however, I embraced it, and soon found myself no longer reading texts of all kinds in quite the same way; moreover, my sense of them being discrete began to erode, as I seamlessly switched from fact to fiction, from the past to the present, from the concrete to the theoretical and back again. Reading on paper, I had a tendency to have maybe ten or twelve books “on the go” at once. Reading digitally, this has expanded to scores, hundreds even.

In the world of the compulsive e-reader, which I have been for some years now, on a bad day it feels as if you’re suffering from a Zen meditational disease, and that all the texts ever written are ranged before your eyes, floating in space—ones you’ve read and know, ones you haven’t read and yet also know, and ones you’ve never even imagined existed before—despite which you instantaneously comprehend them, as you feel sure you would any immaterial volume you pulled from the equally immaterial shelves of this infinite, Borgesian library of silent babel. But precisely what’s lost in this realm—one that partakes of the permanent “now” that is intrinsic to the internet—is the coming into being of texts and their disappearance into true oblivion. As it is to humans, surely it is to the books that we make in our image: a book that lives forever has no real soul, for it cannot cry out in Ciceronian fashion, “O tempora, o mores!” only tacitly and shiningly endure.

The canon itself requires redundancy as well as posterity, which returns us, neatly if not happily, to Norman Mailer. Of all the dead white men whose onetime notoriety is now seen as a function of time and custom rather than intrinsic worth, Mailer is probably one of the worst offenders. His behavior was beyond reprehensible—and, let’s face it, his books weren’t even that good. Why did I light on him, when we’ve been witnessing the twilight of these idols for years now, as the postwar generation of male American writers shuffles toward their graves, their once proud shoulders now garlanded round with the fading laurels of their formerly big-dicked swagger? Possibly in order to galvanize my readers’ critical faculties: many of you will object—along with the Guardian commenters—quite rightly to the view that a literary culture is sliding into oblivion, on the grounds that another is arising, beautiful and phoenix-like, from its ashes.

For are we not witnessing a flowering of talent and a clamor of new voices? Is not women’s writing and writing by those coming from the margins receiving the kind of attention it deserved for years but was denied by this heteronormative, patriarchal, paper-white hegemony? One of the strongest and most credible counters to the argument that in transitioning from page to screen we lose the art forms that arose in and were dependent on that medium is that this is simply the nostalgia of a group who for too long occupied the apex of a pyramid, the base of which ground others into the dust. Younger and more diverse writers are now streaming into the public forums—whether virtual or actual—and populating them with their stories, their realities; and all this talk of death by iPad is simply the sound of old men (and a few women) shaking their fists at the cloud.

In this historical moment, it perhaps seems that the medium is irrelevant; the prevalence of creative writing programs and the self-published online text taken together seem to me to represent a strange new formula arrived at by the elision of two aphoristic observations: this permanent Now is indeed the future in which everyone is famous for fifteen minutes—and famous for the novel that everyone was also assured they had in them. How can the expansion of the numbers writing novels—or at least attempting to write them—not be a good thing? Maybe it is, but it’s not writing as we knew it; it’s not the carving out of new conceptual and imaginative space, it’s not the boldly solo going but rather a sort of recursive quilting, in which the community of writers enacts solidarity through the reading of one another’s texts.

For in a cultural arena such as this, the avant-garde ends mathematically, as the number of writers moves from being an arithmetic to a geometric coefficient of the number of readers. We know intuitively that we live in a world where you can say whatever you want—because no one’s listening. A “skeuomorph” is the term for a once functional object that has, because of technological change, been repurposed to be purely decorative. A good example of this is the crude pictogram of an old-fashioned telephone that constitutes the “phone” icon on the screen of your handheld computer (also known, confusingly, as a smartphone).

Skeuomorphs tend, for obvious reasons, to proliferate precisely at the point where one technology gives ground to another. I put it to you that the contemporary novel is now a skeuomorphic form, and that its proliferation is indeed due to this being the moment of its transformation from the purposive to the decorative. Who is writing these novels, and what for, is beside the point. Moreover, the determination to effect cultural change—by the imposition of diversity quotas—is itself an indication of printed paper’s looming redundancy. Unlike the British novelist Howard Jacobson, who recently took it upon himself to blame the reader for the decline in sales of literary fiction, rather than the obsolescence of its means of reproduction, I feel no inclination to blame anyone at all: this is beyond good and evil.

What’s significant about #MeToo and #BlackLivesMatter is that they’re movements that are designated by the scriptio continua of BDDM, with the addition of hashtags. What will become of the unicameral mind, convinced of its own autonomy, that the novel and other long-form prose mirrored? For I do believe this transformation is about who we are as much as about what and how we read. The answer is, I’ve no idea, but the indications are that, as ever in the affairs of humankind, new technologies both exacerbate the madness of crowds and activate their wisdom. At one level I feel a sense of liberation as all these papery prisons, with their bars of printed type, crumple up, and I float up into the glassy empyrean, there to join the angelic hosts of shiny and fluorescent pixels. That there’s no more ego-driven and ego-imprisoned creature than the writer (and by extension the reader) is a truth that’s been acknowledged for a very long time. Before he pronounces that “of the making of many books there is no end,” the Preacher asserts that all is vanity—and he means writerly vanity. Apparently, there’s now an algorithm that parses text for instances of the personal pronoun and can diagnose depression on the basis of its ubiquity. And isn’t it notable that as the literary novel quits center stage of our culture, we hear a frenzied exclamation of “I! I! I!” What can this be, save for McLuhan’s “Gutenberg mind” indulging in its last gasp, as it morphs into a memoir or a work of so-called creative non-fiction?

If the literary novel was the crucible of a certain sort of self-conception, one of autonomy and individuality, then it was equally the reinforcer of alienation and solipsism. I was taught to read by my mother, who, being American, knew all about phonics and flash cards, the trendy knowledge-transfer technologies of the early 1960s. I recall her tracing the shapes of the letters on the velveteen nap of a sofa we had, then erasing them with a sweep of her hand—with the result that I could read by the time I went to kindergarten. So it’s been a long life of the Logos for me—one, I’d estimate, that has placed me in solitary confinement for a quarter century or more, when I add up all that reading and writing.

It was only when finishing this essay that I fully admitted to myself what I’d done: created yet another text that’s an analysis of our emerging BDDM life but that paradoxically requires the most sophisticated pre-BDDM reading skills to fully appreciate it. It’s the same feeling—albeit in diminuendo—as the one I had when I completed the trilogy of novels I’ve been working on for the past eight years, books that attempt to put down on paper what it feels like for human minds to become technologically transformed. I felt like one of those Daffy Ducks who runs full tilt over the edge of a precipice, then hovers for a few seconds in midair (while realization catches up with him), before plummeting to certain death. Look down and you may just see the hole I made when I hit the ground. Look a little more closely, and you may see the one you’re making right now, with the great hydrocephalic cannonball of a Gutenberg mind you’ve used to decipher this piece.

At the end of Bradbury’s Fahrenheit 451, the exiled hoboes return to the cities, which have been destroyed by the nuclear conflicts of the illiterate, bringing with them their head-borne texts, ready to restart civilization. And it’s this that seems to me the most prescient part of Bradbury’s menacing vision. For I see no future for the words printed on paper, or the art forms they enacted, if our civilization continues on this digital trajectory: there’s no way back to the future—especially not through the portal of a printed text.