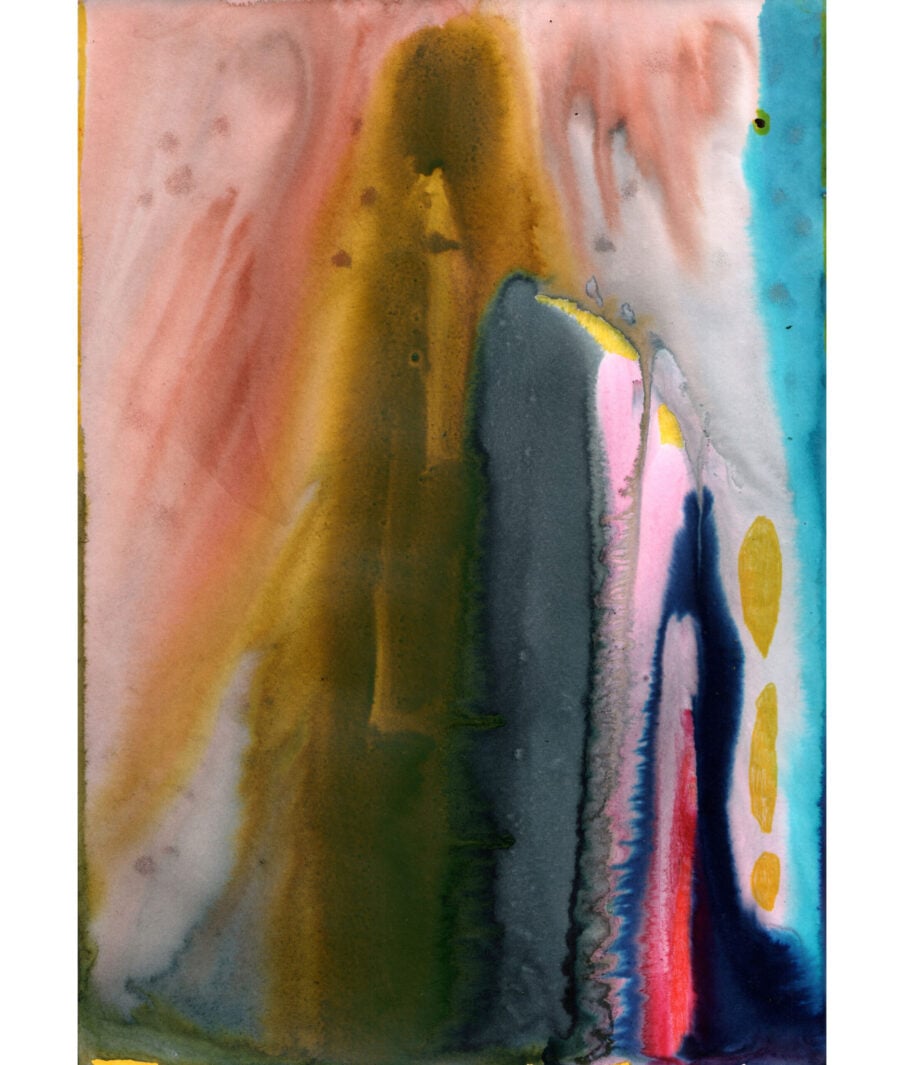

Watercolors by Emma Larsson for Harper’s Magazine. Larsson’s watercolors are responses to poems featured in this essay. This watercolor is a response to the AI continuation of Emily Dickinson’s poem. All paintings © The artist. Courtesy the artist and Simard Bilodeau Contemporary, Los Angeles.

“Far as the east from even, / Dim as the border star, / Life is the little creature / That carries the great cigar.” So wrote Emily Dickinson, with some unfortunate help from a computer. As I read that stanza in February 2022, I was more than six months into a scientific experiment I was conducting with my friend and colleague Morten Christiansen, a cognitive psychologist at Cornell, where he and I are professors. In 2021, two years before ChatGPT would become a household name, Christiansen had been impressed by the initial technical descriptions of GPT-3, the recently released version of the generative large language…