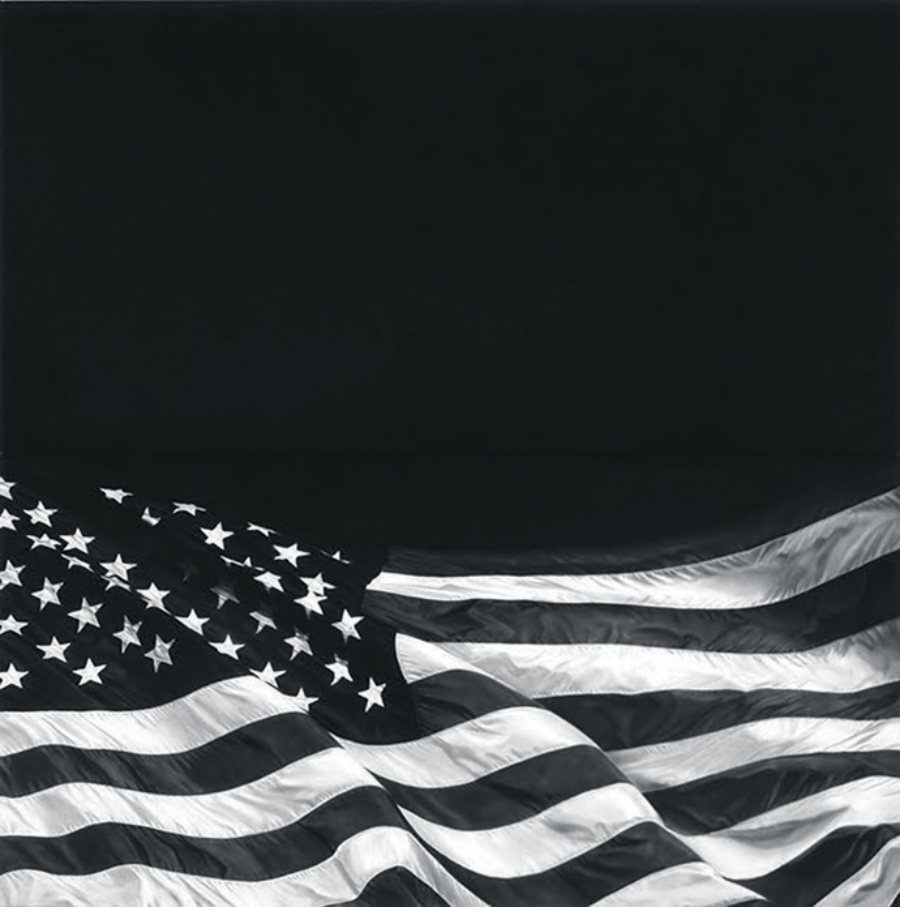

The Last Flag (Dedicated to Howard Zinn), a charcoal drawing by Robert Longo

Courtesy the artist and Metro Pictures, New York City

Addressing the graduating cadets at West Point in May 1942, General George C. Marshall, then the Army chief of staff, reduced the nation’s purpose in the global war it had recently joined to a single emphatic sentence. “We are determined,” he remarked, “that before the sun sets on this terrible struggle, our flag will be recognized throughout the world as a symbol of freedom on the one hand and of overwhelming force on the other.”

At the time Marshall spoke, mere months after the Japanese attack on Pearl Harbor, U.S. forces had sustained a string of painful setbacks and had yet…