What Are You Going to Do With That?

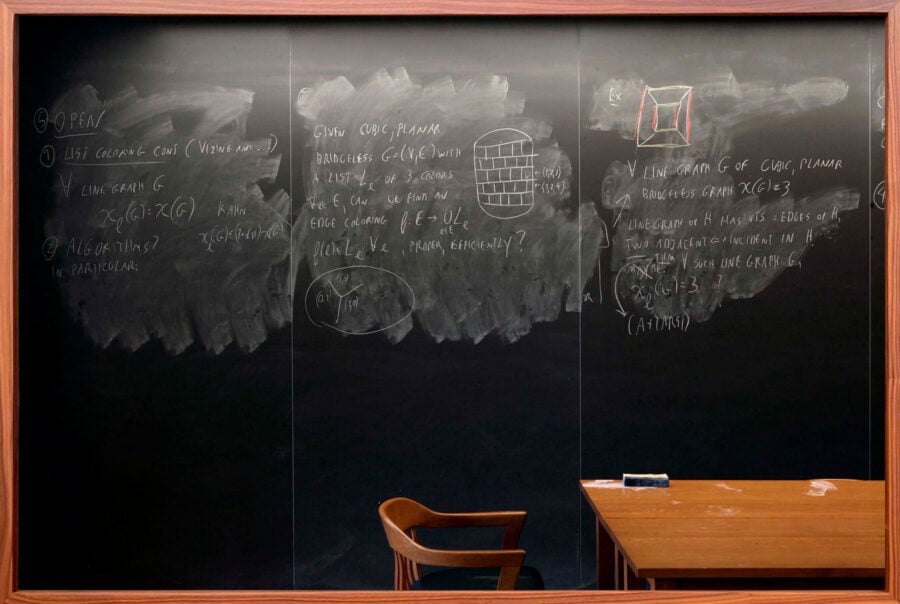

“Noga Alon, Princeton University,” by Jessica Wynne, from the series Do Not Erase © The artist. Courtesy Edwynn Houk Gallery, New York City


Does anyone believe in college education anymore? Republicans certainly don’t—a mere 19 percent of them expressed “a great deal” or “quite a lot” of confidence in higher education in a Gallup poll last year. But we already knew that. More striking is that Democrats’ confidence is down to 59 percent. Men, women, young people, the middle-aged, and the elderly range from ambivalent about to decidedly wary of today’s colleges and universities. Of college graduates themselves, only 47 percent were able to muster more than “some” confidence in the institutions that minted their credentials. No wonder fewer students are enrolling in college after high school and fewer students who matriculate stay on track to finish their degrees.

On campus, the atmosphere of disillusionment is just as thick—including at elite schools like Harvard, where I teach. College administrators have made it clear that education is no longer their top priority. Teachers’ working conditions are proof of this: more and more college classes, even at the wealthiest institutions, are being taught by underpaid and overworked contingent faculty members. College students appear to have gotten the memo: the amount of time they spend studying has declined significantly over the past half century. “Harvard has increasingly become a place in Cambridge for bright students to gather—that happens to offer lectures on the side,” one undergraduate recently wrote in Harvard Magazine. Survey data suggests that more and more students view college education in transactional terms: as an exchange of time and tuition dollars for credentials and social connections rather than as a site of valuable learning. The Harvard Crimson’s survey of the graduating class of 2024 found that nearly half admitted to cheating, almost twice the figure in last year’s survey, conducted when ChatGPT was still new. The cheaters include close to a third of students with GPAs that round to 4.0, a share more than three times what it was just two years ago.

The response to this year’s protests against Israel’s war on Gaza threw our ruling class’s skepticism of higher education into sharp relief. On campus, crackdowns on encampments proved an ideal opportunity for university administrators to vent their pent-up fury against students and faculty alike. Columbia’s quasi-military campaign against its protesters resulted, at its peak, in the near-total shutdown of its campus; meanwhile, my employer’s highest governing authority, the Harvard Corporation, composed largely of ultra-wealthy philanthropists, overruled the school’s Faculty of Arts and Sciences and barred thirteen student activists from graduating, including two Rhodes Scholarship recipients. In the media, many political and economic elites have cheered on the repression of pro-Palestinian speech, revealing in the process their contempt for the very concept of college. “Higher education in four words: Garbage in, garbage out,” the Democratic New York representative Ritchie Torres wrote in a post on Twitter, mocking the research of a Columbia student involved in the school’s encampment. Bill Maher rolled out a symptomatic “New Rule” on his show in late October: “Don’t go to college.”

The fact that even many affluent liberals today don’t think a college education is worthwhile would have perplexed social theorists a generation or two ago. “Universities play a crucial role in the production of labor power, in the reproduction of the class structure, and in the perpetuation of the dominant values of the social order,” the Marxian economists Samuel Bowles and Herbert Gintis wrote in their landmark 1976 work Schooling in Capitalist America. The French sociologist Pierre Bourdieu wagered that the “function of legitimation” performed by the education system—“converting social hierarchies into academic hierarchies”—would become “more and more necessary to the perpetuation of the ‘social order’ ” as the ruling class shifted away from a “crude and ruthless” exercise of its power. And it wasn’t just the radicals who thought this way. Neoliberal economists ensnared the American political establishment in a quest to rectify the “skills gap” allegedly at the heart of economic inequality. “More than ever,” Barack Obama said in 2014, “a college degree is the surest path to a stable middle-class life.”

The political establishment has traveled a long road to get from such bromides to “garbage in, garbage out.” But this shift doesn’t just register a transformation in values or attitudes. It is also a sign that something fundamental has changed about the function of college in the reproduction of social hierarchy—that it may no longer be essential to modern capitalist societies. American economic and political elites did not always rely on colleges to produce their successors. Perhaps there is no reason to think that they will do so in the future.

Many academics—professors and administrators alike—and public commentators underestimate Americans’ growing and broad-based skepticism of a college education. For years, they have been preoccupied with a narrower crisis: students’ lack of interest in the humanities. One might suspect, in fact, that in maligning the value of “college,” critics are really speaking of the humanities in code. As Nathan Heller observed last year in The New Yorker, our cultural vision of college often remains populated by tweed-clad quad dwellers perusing James Joyce (or perhaps Judith Butler), even if we may understand that science and technology dominate education and research at today’s large universities. Perhaps the task for schools, then, is to signal more emphatically their distance from this outdated humanistic image—to codify the de facto status of STEM as the center of gravity of higher ed. Humanities departments might even benefit from defining themselves explicitly as ancillaries to STEM. Heller notes that the number of majors in my department, History of Science, has increased significantly in recent years as the number of humanities majors has plunged elsewhere at Harvard; at Arizona State University, business majors can concentrate in “language and culture.” The arcane rewards of humanistic study may no longer suffice as an answer for what a college education is good for, but it’s not too late to formulate new answers.

When was the last time people actually went to college because they thought reading old books was its own reward? If the humanities have indeed expired, it was a rather protracted death. Heller dates the start of the enrollment plunge to around 2012; two years earlier, Martha Nussbaum warned that the arts and humanities, as well as “the humanistic aspects of science and social science,” were “losing ground” worldwide. Two years before that, the former Yale English professor William Deresiewicz made waves with a viral essay, the impetus for his later book Excellent Sheep, that bemoaned the eclipse of “the humanistic ideal in American colleges.” In 1987, Allan Bloom claimed in The Closing of the American Mind that students had by then “lost the practice of and the taste for reading,” a development he argued had begun—along with a new spirit of intolerance toward free thought on campus—by the late Sixties. In 1956, the journalist William H. Whyte complained, in his best-selling jeremiad The Organization Man, that engineering- and business-education boosters were striving self-consciously to marginalize the humanities, despite the latter’s already “dismal” enrollment numbers. Whyte attributed the rise of the organization man’s ethos in part to the influence of the pragmatist philosophy of John Dewey and William James. But in 1903, in the infancy of the American research university, James had himself warned of the dangers of the “Ph.D. Octopus,” which threatened, he thought, to strangle the spirit of humanistic inquiry and “substance” with the tentacles of “vanity and sham.”

Of all these prophets, James, a philosophy and psychology professor at Harvard during the Gilded Age and the son of a wealthy Northeastern theologian, had perhaps the greatest claim to actually having witnessed humanistic education in its antediluvian era. Its mission was to mold men of his social station. In the late nineteenth century, the bourgeoisie that emerged triumphant from the Civil War—the WASP elite, as it would later be known—consolidated around a standard educational sequence for its scions. They would start off at a private boarding school, then head to an elite college, preferably Yale, Princeton, or Harvard. Along the way, they’d study the liberal arts: subjects such as the Greek and Roman classics, philosophy, literature, mathematics, natural history, and astronomy. The purpose of this curriculum, wrote The Nation’s founding editor, Edwin L. Godkin, was to enable the youngest members of the elite to “start on their careers with a common stock of traditions, tastes and associations.” Such education also prepared young American aristocrats to rule by equipping them with discipline and character. There was a solid economic logic to such notions. Until the early twentieth century, the expansion of industrial capitalism depended on the continual reinvestment of profit—which in turn required a firm sense of duty and purpose, to guard against the temptation to squander fortunes on idle luxuries.

By the time James expressed his concerns about the Ph.D. Octopus, the structure of American capitalism was undergoing a major evolution. It became clear that a significant source of growth was technical innovation, not merely saving and investing. This development vastly enhanced the prestige of the sciences—including the social sciences—as well as engineering among the WASP elite. By the interwar period, as the historian Andrew Jewett has shown, general education programs at schools like Columbia and Harvard increasingly emphasized a “scientific attitude” over familiarity with classical philosophy and literature. At the same time, the need of American corporations for technically skilled workers—to design new products and production processes and to coordinate the flow of information across sprawling organizations—increased precipitously, and a much wider stratum of the American populace went to college to become the organization men that Whyte examined.

But Whyte’s foreboding was premature. A variety of countervailing forces buoyed the study of literature and history enough to produce what many humanists now regard as a golden age. The Cold War’s military-industrial capitalism found new uses for the humanities and the social sciences, whether in analyses of foreign cultures or in artistic advertisements for the rewards of American freedom. At the same time, the diversification of the academy created a social foundation for activist movements that demanded the production of new critical-humanistic knowledge—knowledge about the inner workings of capitalism, racism, imperialism, and the patriarchy, and how best to challenge them. Perhaps most significantly, humanistic training retained a place in shaping the creative, free-thinking, and adaptable minds that the midcentury university promised to produce. The task of the modern research university, wrote the influential former University of California system president Clark Kerr, was to supplant the “monistic” university, with its devotion to a single purpose or faith. These outmoded institutions were

more static in a dynamic world, more intolerant in a world crying for understanding and accommodation to diversity, more closed to the unorthodox person and idea, more limited in their comprehension of total reality.

These vices would exact a material as well as a spiritual cost on students entering a volatile, rapidly changing job market. “Since we live in an age of innovation, a practical education must prepare a man for work that does not yet exist and cannot yet be clearly defined,” the influential business writer and consultant Peter Drucker asserted in 1959. Mere technical training, no matter what critics like Whyte feared, was insufficient, even from a ruthlessly instrumental perspective. It still paid to be able to think.

The fault lines that emerged in higher education in the postwar decades still shape the landscape of academia today. Activists on both the right and the left have kept visions of more dramatic transformations alive: on the one hand, a return to classical education aimed at strengthening the moral fiber of the elite, and on the other, the creation of a truly democratic university allied with popular movements for social change. But just as today’s furor over “wokeness” on college campuses mirrors the “political correctness” Kulturkampf of the Nineties and arguments about the purpose of higher education in the Sixties, today’s debates about the future of the humanities in the STEM-oriented university follow the same beats they have since the early twentieth century. “What [the humanities] does is teach you not a particular skill or technology, but to think and question,” the Indian billionaire Anand Mahindra said in 2010, explaining his decision to make a $10 million gift to what is now the Mahindra Humanities Center at Harvard. The biologist Joseph L. Graves Jr., meanwhile, proclaimed in 2021 that “America needs to be willing to pay . . . to build out STEM education infrastructure, so that we can produce the number of STEM professionals we need going forward.”

This static back-and-forth, however, plays out in an economic landscape that has continued to transform. Word is starting to get out that STEM programs have just as much trouble justifying their worth as the humanities do. As noted by the UC San Diego sociologist John D. Skrentny, author of last year’s Wasted Education: How We Fail Our Graduates in Science, Technology, Engineering, and Math, just over a quarter of STEM graduates actually go on to work in STEM jobs—about half of them in the computer field. In 2022, the University of Vermont folded its geology department into a new Department of Geography and Geosciences. West Virginia University discontinued its graduate programs in mathematics in 2023. As Francesca Mari recently reported in the New York Times, undergraduates at elite universities are steadily preparing for a narrowing window of careers: those in finance and consulting, rather than in jobs for which a STEM degree is a prerequisite. “If you’re not doing finance or tech, it can feel like you’re doing something wrong,” testifies one of her informants, a Harvard undergraduate who switched his major from environmental engineering to economics. (In the late 2010s, some colleges started classifying economics as a STEM major, ultimately preserving the case for STEM by altering the terms of the debate.)

As that student’s pivot suggests, “tech” today is more of an appendage to finance than an alternative to it. “Going into tech” does not mean getting an engineering job at a large manufacturing corporation. It means, archetypally, winning venture-capital investment for a startup. This does not necessarily require a STEM degree—or any degree, for that matter, as the examples of Bill Gates, Steve Jobs, and Mark Zuckerberg testify. Airbnb’s co-founders met at the Rhode Island School of Design. The billionaire Pinterest co-founder Evan Sharp has (gasp!) a history degree. “There’s no need even to have a college degree at all, or even high school,” Elon Musk claimed in 2014, discussing his approach to hiring at Tesla. Since 2011, Peter Thiel—a philosophy major and a lawyer by training—has funded a fellowship program that exemplifies the tech world’s cult of the dropout, giving $100,000 grants to “young people who want to build new things instead of sitting in a classroom,” as the program’s website puts it. To the extent that Goldman Sachs CEO David “DJ D-Sol” Solomon has applied what he learned in college to his climb to the pinnacle of Wall Street, it seems more likely to have been the lessons he picked up as a chair of his fraternity than anything on the syllabus in his political science classes. Deals, as one wealthy businessman devoid of technical expertise famously observed, are an art form.

Of course, most fraternity presidents do not end up becoming CEOs of investment banks. Solomon also got lucky, which is what today’s college students are hoping will happen to them when they declare their intention to go into finance or tech. They are betting their futures on the chance that their Wall Street internship or dorm-room startup will lead them to a million- or billion-dollar bonanza. “There’s this idea that you either make it or you don’t, so you better make it,” as a psychologist interviewed by Mari for the Times described the attitudes of Gen Z students. That is a judgment with a fair bit of evidence on its side. A 2020 Brookings Institution report found that the college-educated population is no exception to a wider explosion of middle-class precarity in a winner-take-all economic hierarchy. Plenty of college students who start off middle-class still get rich, but “the share of families with a college-educated head who fall down and out of the middle class”—into the bottom quintile of income—has risen significantly in the past forty years.

Meanwhile, those who do get rich these days, regardless of their educational background, do so largely by owning appreciating assets such as stocks and real estate. Today even corporate executives acquire the lion’s share of their wealth through capital gains on stock options rather than through their salaries (a good deal for them, since the stock market has risen more swiftly than the economy overall). As Melinda Cooper summarizes in her new book, Counterrevolution: Extravagance and Austerity in Public Finance,

the transition that is perhaps too crudely referred to as “financialization” is one that turned asset price movements into the chief determinant of income and wealth shares across the economy.

That transition was deliberately engineered by public-finance policymakers in the past half century, through arcane changes in the tax code and monetary interventions designed to ensure steady inflation in asset prices (while, as a side effect, accelerating deindustrialization elsewhere in the economy).

The asset economy, Cooper shows, helped to restore the significance of dynastic wealth to American capitalism—resituating the family at the center of economic life by more firmly tethering prosperity to inheritance. But an economy in which the best way to get rich is to be born rich is one in which the value proposition of a college education is hazy no matter what you intend to study. The educational reformers who best understand how capitalism has changed in recent decades are not the STEM boosters, reheating a vision of the knowledge economy that is far staler than most of them realize. Rather, they are conservatives like the former Nebraska senator Ben Sasse, who has a Ph.D. in history from Yale and who was appointed president of the University of Florida in 2023, amid Ron DeSantis’s war against wokeness in education. In a December opinion piece in The Atlantic, Sasse assailed the moral decline of elite universities, an argument that elucidates the conservative vision of the future of higher education as a finishing school for our new aristocracy: the moral tutelage emphasized by the old WASP elite, refashioned for the children of real estate developers and fast-food franchise emperors, all those young people whose trust funds render moot the question of future employability.

But while plenty of American scions will continue to go to college for fun or for Wall Street connections or because their parents make them, it is not clear that the gentry of the asset economy will feel the same need for moral cultivation that possessed their nineteenth-century predecessors. Personal thrift is less essential to their continued fortunes, and their working-class subordinates, despite some hopeful signs in recent years, are considerably more quiescent than the revolutionaries who drove Gilded Age capitalists to develop an interest in industrial statesmanship. “The educational system fosters and reinforces the belief that economic success depends essentially on the possession of technical and cognitive skills,” Bowles and Gintis wrote in Schooling in Capitalist America, “skills which it is organized to provide in an efficient, equitable, and unbiased manner on the basis of meritocratic principle.” Such a belief was, of course, useful for the ruling class to cultivate, but it is perhaps less essential than it seemed from the vantage point of the Seventies. A seemingly inexhaustible flood of recent books debunking the myth of meritocracy has thus far left the rule of capital undisturbed. The crude and ruthless, in Bourdieu’s words, seems to serve today’s elites just fine.