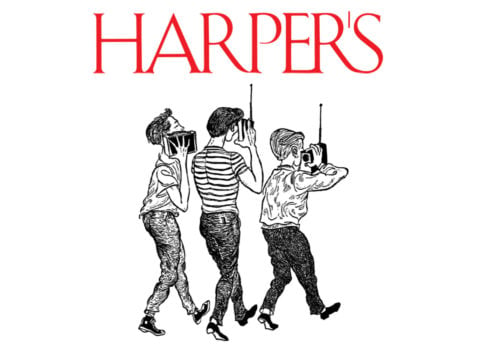

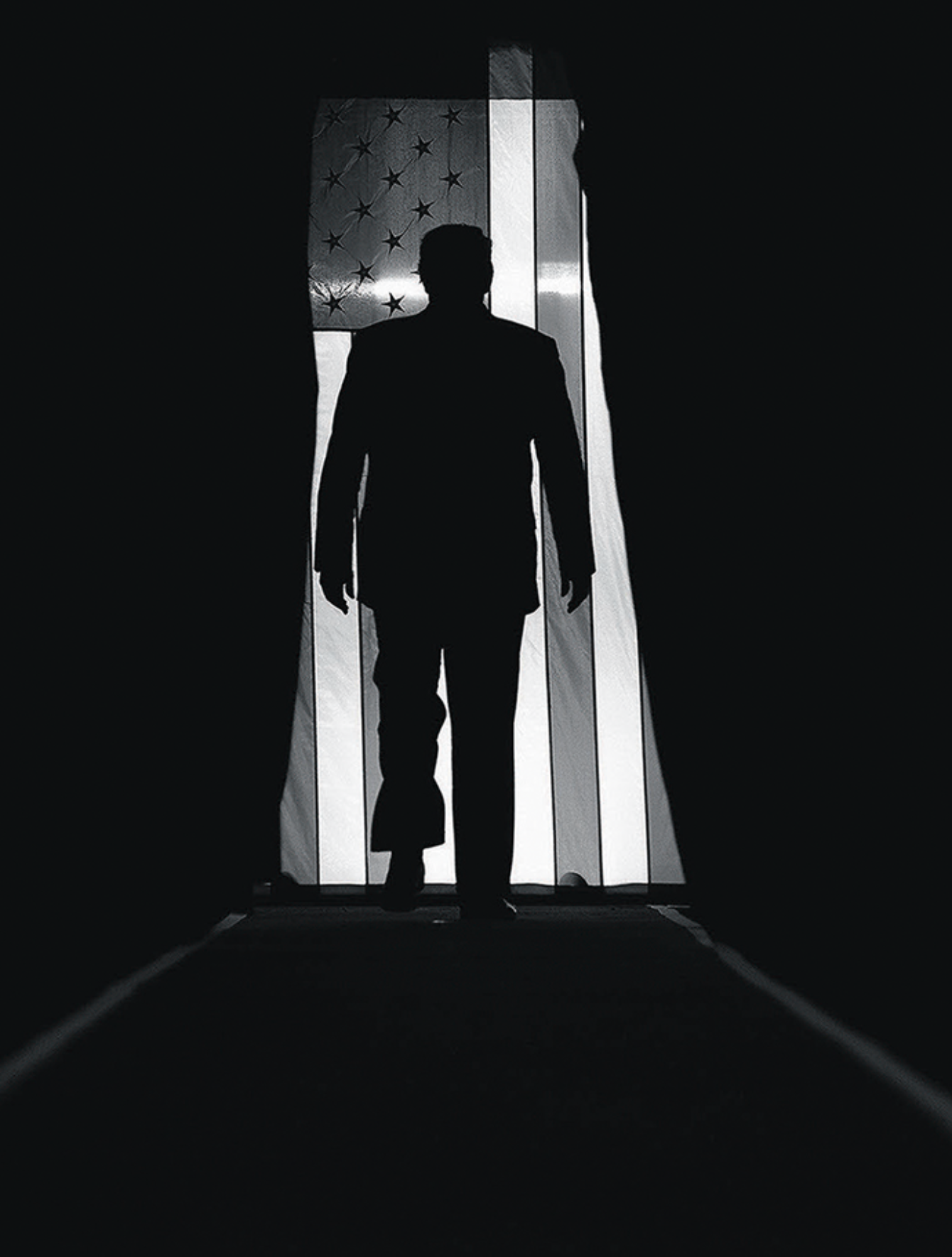

The Last Flag (Dedicated to Howard Zinn), a charcoal drawing by Robert Longo

Courtesy the artist and Metro Pictures, New York City

Addressing the graduating cadets at West Point in May 1942, General George C. Marshall, then the Army chief of staff, reduced the nation’s purpose in the global war it had recently joined to a single emphatic sentence. “We are determined,” he remarked, “that before the sun sets on this terrible struggle, our flag will be recognized throughout the world as a symbol of freedom on the one hand and of overwhelming force on the other.”

At the time Marshall spoke, mere months after the Japanese attack on Pearl Harbor, U.S. forces had sustained a string of painful setbacks and had yet to win a major battle. Eventual victory over Japan and Germany seemed anything but assured. Yet Marshall was already looking beyond the immediate challenges to define what that victory, when ultimately (and, in his view, inevitably) achieved, was going to signify.

This second world war of the twentieth century, Marshall understood, was going to be immense and immensely destructive. But if vast in scope, it would be limited in duration. The sun would set; the war would end. Today no such expectation exists. Marshall’s successors have come to view armed conflict as an open-ended proposition. The alarming turn in U.S.–Iranian relations is another reminder that war has become normal for the United States.

The address at West Point was not some frothy stump speech by a hack politician. Marshall was a deliberate man who chose his words carefully. His intent was to make a specific point: the United States was fighting not to restore peace—a word notably absent from his remarks—nor merely to eliminate an isolated threat. The overarching American aim was preeminence, both ideological and military: as a consequence of the ongoing war, America was henceforth to represent freedom and power—not in any particular region or hemisphere but throughout the world. Here, conveyed with crisp military candor, was an authoritative reframing of the nation’s strategic ambitions.*

Marshall’s statement captured the essence of what was to remain America’s purpose for decades to come, until the presidential election of 2016 signaled its rejection. That year an eminently qualified candidate who embodied a notably bellicose variant of the Marshall tradition lost to an opponent who openly mocked that tradition while possessing no qualifications for high office whatsoever.

Determined to treat Donald Trump as an unfortunate but correctable aberration, the foreign-policy establishment remains intent on salvaging the tradition that Marshall inaugurated back in 1942. The effort is misguided and will likely prove futile. For anyone concerned about American statecraft in recent years, the more pressing questions are these: first, whether an establishment deeply imbued with Marshall’s maxim can even acknowledge the magnitude of the repudiation it sustained at the hands of Trump and those who voted him into office (a repudiation that is not lessened by Trump’s failure to keep his promises to those voters); and second, whether this establishment can muster the imagination to devise an alternative tradition better suited to existing conditions while commanding the support of the American people. On neither score does the outlook appear promising.

General George C. Marshall at the headquarters of the War Department, 1943 © Bettmann/Getty Images

General Marshall delivered his remarks at West Point in a singular context. He gingerly referred to a “nationwide debate” that was complicating his efforts to raise what he called “a great citizen-army.” That debate concerned whether the United States should intervene in the ongoing European war. To proponents of intervention, the issue at hand from 1939 to 1941 was the need to confront the evil of Nazism. Opponents of intervention framed the debate around a quite different question: whether or not to resume an expansionist project dating from the founding of the Republic. This dispute and its apparent resolution, misunderstood and misconstrued at the time, have been sources of confusion ever since.

Even today, most Americans are only dimly aware of the scope—one might even say the grandeur—of our expansionist project, which stands alongside racial oppression as an abiding theme of the American story. As far back as the 1780s, the Northwest Ordinances, which created the mechanism to incorporate the present-day Midwest into the Union, had made it clear that the United States had no intention of confining its reach to the territory encompassed within the boundaries of the original thirteen states. And while nineteenth-century presidents did not adhere to a consistent grand plan, they did pursue a de facto strategy of opportunistic expansion. Although the United States encountered resistance during the course of this remarkable ascent, virtually all of it was defeated. With the notable exception of the failed attempt to annex Canada during the War of 1812, expansionist efforts succeeded spectacularly and at a remarkably modest cost to the nation. By midcentury, the United States stretched from sea to shining sea.

Generations of Americans chose to enshrine this story of westward expansion as a heroic tale of advancing liberty, democracy, and civilization. Although that story certainly did include heroism, it also featured brute force, crafty maneuvering, and a knack for striking a bargain when the occasion presented itself.

In the popular imagination, the narrative of “how the West was won” to which I was introduced as a youngster has today lost much of its moral luster. Yet the country’s belated pangs of conscience have not induced any inclination to reapportion the spoils. While the idea of offering reparations to the offspring of former slaves may receive polite attention, no one proposes returning Florida to Spain, Tennessee and Georgia to the Cherokees, or California to Mexico. Properties seized, finagled, extorted, or paid for with cold, hard cash remain American in perpetuity.

Battlefield memorial for a dead U.S. soldier, Normandy, France, 1944 (detail)

Back in 1899, the naturalist, historian, politician, sometime soldier, and future president Theodore Roosevelt neatly summarized the events of the century then drawing to a close: “Of course our whole national history has been one of expansion.” When T.R. uttered this truth, a fresh round of expansionism was under way, this time reaching beyond the fastness of North America into the surrounding seas and oceans. The United States was joining with Europeans in a profit-motivated intercontinental imperialism.

The previous year, U.S. forces had invaded and occupied Cuba, Puerto Rico, Guam, and the Pacific island of Luzon, and annexed Hawaii as an official territory. Within the next two years, the Stars and Stripes was flying over the entire Philippine archipelago. Within four years, with Roosevelt now in the White House, American troops arrived to garrison the Isthmus of Panama, where the United States, employing considerable chicanery, was setting out to build a canal. Thereafter, to preempt any threats to that canal and other American business interests, successive U.S. administrations embarked on a series of interventions throughout the Caribbean. Roosevelt, William Howard Taft, and Woodrow Wilson had no desire to annex Nicaragua, Haiti, and the Dominican Republic; they merely wanted the United States to control what happened in those small countries, as it already did in nearby Cuba. Though President Trump’s recent bid to purchase Greenland from Denmark may have failed, Wilson—perhaps demonstrating greater skill in the art of the deal—did persuade the Danes in 1917 to part with the Danish West Indies (now the U.S. Virgin Islands) for the bargain price of $25 million. At least until Trump moved into the White House, Wilson’s purchase of the Virgin Islands appeared to have sated the American appetite for territorial acquisition. With that purchase, the epic narrative of a small republic becoming an imperial behemoth concluded and was promptly filed away under the heading of Destiny, manifest or otherwise—a useful turn, since Americans were and still are disinclined to question those dictates of God or Providence that work to their benefit.

Yet rather than accept the nation’s fate as achieved, President Wilson radically reconfigured American ambitions. Expansion was to continue but was henceforth to emphasize hegemony rather than formal empire. This shift included a seldom-noticed racial dimension. Prior to 1917, the United States had mostly contented itself with flexing its muscles among non-white peoples. Wilson sought to encroach into an arena where the principal competitors were white. Pacifying “little brown brothers” (Taft’s disparaging term for Filipinos) was like playing baseball in Rochester or Pawtucket. Now the United States was ready to break into the big leagues.

For Americans today, it is next to impossible to appreciate the immensity of the departure from tradition that President Wilson engineered in 1917. Until that year, steering clear of foreign rivalries had constituted a sacred precept of American statecraft. The barrier of the Atlantic was sacrosanct, to be breached by merchants but not by soldiers.

“The great rule of conduct for us in regard to foreign nations,” George Washington had counseled in his Farewell Address, “is in extending our commercial relations, to have with them as little political connection as possible.” This dictum had applied in particular to U.S. relations with Europe. Washington had explicitly warned against allowing the United States to be dragged into “the ordinary vicissitudes of her politics, or the ordinary combinations and collisions of her friendships or enmities.”

The Great War persuaded President Wilson to disregard Washington’s advice. At the war’s outset, in 1914, Wilson had declared that the United States would be “neutral in fact, as well as in name.” In reality, as the conflict settled into a bloody stalemate, his administration tilted in favor of the Allies. By the spring of 1917, with Germany having renewed U-boat attacks on U.S. shipping, he tilted further, petitioning Congress to declare war on the Reich. Congress complied, and in short order over a million doughboys were headed to the Western Front. In terms of sheer roll-the-dice boldness, Wilson’s decision to go to war against Germany dwarfs Lyndon Johnson’s 1965 escalation of the Vietnam War and George W. Bush’s 2003 invasion of Iraq. It was an action utterly without precedent.

And as with Vietnam and Iraq, the results were costly and disillusioning. Although U.S. forces entered the fight in large numbers only weeks before the armistice in November 1918, American deaths exceeded 116,000—this when the total U.S. population was less than one third what it is today. Happy to accept American help in defeating the Hun, British and French leaders wasted little time once the fighting had stopped in rejecting Wilson’s grandiose vision of a peaceful world order based on his famous Fourteen Points. By the time the U.S. Senate refused to ratify the Versailles Treaty, in November 1919 and again in March 1920, it had become evident that Wilson’s stated war aims would remain unfulfilled. In return for rallying to the Allies in their hour of need, the United States had gained precious little. In Europe itself, meanwhile, the seeds of further conflict were already being planted.

A deeply disenchanted American public concluded, not without reason, that the U.S. entry into the war had been a mistake, an assessment that found powerful expression in the fiction of Ernest Hemingway, John Dos Passos, and other interwar writers. The man who followed Wilson in the White House, Warren G. Harding, agreed. The principal lesson to be drawn from the war, “ringing” in his ears, “like an admonition eternal, an insistent call,” Harding declared, was, “It must not be again!” His was not a controversial judgment. During the 1920s, therefore, George Washington’s charge to give Europe a wide berth found renewed favor. According to legend, the United States then succumbed to two decades of unmitigated isolationism.

U.S. soldiers in Afghanistan, 2004 (detail) © Moises Saman/Magnum Photos

The truth, as George Marshall and his military contemporaries knew, was more complicated. From 1924 to 1927, Colonel Marshall was stationed in Tianjin, China, where he commanded the 15th Infantry Regiment. The year 1924 found Brigadier General Douglas MacArthur presiding over the U.S. Army’s Philippine Division, with headquarters at Manila’s Fort Santiago. Around the same time, Dwight D. Eisenhower was serving in Panama while George S. Patton was assigned to the Hawaiian Division, at Schofield Barracks. Matthew Ridgway’s duty stations between the world wars included stints in China, Nicaragua, and the Philippines. In 1935, MacArthur returned to Manila for another tour, this time bringing Ike along as a member of his staff.

The pattern of assignments of these soon-to-be-famous officers was not atypical. During the period between the two world wars, the Army kept busy policing outposts of the American empire. The Navy and Marine Corps shouldered similar obligations. To maintain its “Open Door” policy, for example, the United States deployed a small flotilla of warships at its “China Station,” headquartered in Shanghai, while contingents of U.S. Marines enforced order across the Caribbean. Marine Major General Smedley Butler achieved immortality by confessing that he had spent his career as “a high class muscle-man for Big Business, for Wall Street and for the Bankers.” While hyperbolic, Butler’s assessment was not altogether wrong.

It isn’t possible to square the deployment of U.S. forces everywhere from the Yangtze and Manila to Guantánamo and Managua with any plausible definition of isolationism. While the United States did pull its troops out of Europe after 1918, it maintained its empire. So the abiding defect of U.S. policy during the interwar period was not a head-in-the-sand penchant for ignoring the world. The actual problem was overstretch compounded by indolence. Decision-makers had abdicated their responsibility to align means and ends, even as the world drifted toward the precipice of another horrific conflict.

Nonetheless, those who advocated going to war against Germany in the “nationwide debate” to which Marshall alluded in his West Point speech charged their adversaries with being “isolationists.” The tag resonated—though, in point of fact, isolationism no more accurately described U.S. foreign policy at the time than it does that of the Trump Administration today. (And President Trump is no more an isolationist than he is a Presbyterian.)

Proponents of intervention between 1939 and 1941 were diverting attention from the real issue: whether to remember or to forget. To go to war with Germany a second time meant swallowing the bitter disappointments wrought by having done so just two decades before.

The interventionist case came down to this: given the enormity of the Nazi threat, it was incumbent on Americans to get over their vexations with the recent past. It was time to get back to work. For their part, the anti-interventionists were disinclined to forget. They believed that the Allies had taken the United States to the cleaners, as indeed they had, and they did not intend to repeat the experience. Anti-interventionists insisted that fulfilling the American appetite for liberty and abundance did not require further expansion. They believed that the domain the United States had already carved out in the Western Hemisphere was sufficient to satisfy the aspirations specified in the Preamble to the Constitution. Expansion, in their view, had gone far enough.

In December 1941, Adolf Hitler settled this issue, seemingly for good, when he declared war on the United States after the Japanese attacked Pearl Harbor. The Nazi dictator effectively wiped the slate clean, rendering irrelevant all that had occurred since 1917, including Marshall’s own prior service on the Western Front and in the outer provinces of the American imperium. Thanks to Hitler, the path forward seemed clear. Only one thing was needed: the mobilization of Marshall’s “great citizen-army” as an expression of both freedom and power.

This is the path that the United States has followed, with only occasional deviations and backslides, ever since. As if by default, therefore, Marshall’s dictum of preeminence has remained the implicit premise of American grand strategy: a stubborn insistence that freedom is ours to define and that America’s possession of (and willingness to use) overwhelming force offers the best way to ensure freedom’s triumph, if only so-called isolationists would get out of the way. So nearly eighty years later, we are still stuck in Marshall’s world, with Marshall himself the unacknowledged architect of all that was to follow.

An explosion on the U.S.S. Shaw during the attack on Pearl Harbor, December 7, 1941. Courtesy the George Eastman Museum

In our so-called Trump Era, freedom and power aren’t what they used to be. Both are undergoing radical conceptual transformations. Marshall assumed a mutual compatibility between the two. No such assumption can be made today.

Although the strategy of accruing overwhelming military might to advance the cause of liberty persisted throughout the period misleadingly enshrined as the Cold War, it did so in attenuated form. The size and capabilities of the Red Army, exaggerated by both Washington and the Kremlin, along with the danger of nuclear Armageddon, by no means exaggerated, suggested the need for the United States to exercise a modicum of restraint. Even so, Marshall’s pithy statement more accurately captured the overarching intent of U.S. policy from the late 1940s through the 1980s than any number of presidential pronouncements or government-issued manifestos. Even in a divided world, policymakers continued to nurse hopes that the United States could embody freedom while wielding unparalleled power, admitting to no contradictions between the two.

With the end of the Cold War, Marshall’s axiom came roaring back in full force. In Washington, many concluded that it was time to pull out the stops. Writing in Foreign Affairs in 1992, General Colin Powell, arguably the nation’s most highly respected soldier since Marshall, anointed America “the sole superpower” and, quoting Lincoln, “the last best hope of earth.” Civilian officials went further, designating the United States as history’s “indispensable nation.” Supposedly uniquely positioned to glimpse the future, America took it upon itself to bring that future into being, using whatever means it deemed necessary. During the ensuing decade, U.S. troops were called upon to make good on such claims in the Persian Gulf, the Balkans, and East Africa, among other venues. Indispensability imposed obligations, which for the moment at least seemed tolerable.

After 9/11, this post–Cold War posturing reached its apotheosis. Exactly sixty years after Marshall’s West Point address, President George W. Bush took his own turn in speaking to a class of graduating cadets. With splendid symmetry, Bush echoed and expanded on Marshall’s doctrine, declaring, “Wherever we carry it, the American flag will stand not only for our power, but for freedom.” Yet something essential had changed. No longer content merely to defend against threats to freedom (America’s advertised purpose in World War II and during the Cold War), the United States was now going on the offensive. “In the world we have entered,” Bush declared, “the only path to safety is the path of action. And this nation will act.” The president thereby embraced a policy of preventive war, the same course the Japanese and Germans had pursued and for which they landed in the dock following World War II. It was, in effect, Marshall’s injunction on steroids.

We are today in a position to assess the results of following this “path of action.” Since 2001, the United States has spent approximately $6.5 trillion on several wars, while sustaining some sixty thousand casualties. Post-9/11 interventions in Afghanistan, Iraq, and elsewhere have also contributed directly or indirectly to an estimated 750,000 “other” deaths. During this same period, attempts to export American values triggered a pronounced backlash, especially among Muslims abroad. Clinging to Marshall’s formula as a basis for policy has allowed the global balance of power to shift in ways unfavorable to the United States.

At the same time, Americans no longer agree among themselves on what freedom requires, excludes, or prohibits. When Marshall spoke at West Point back in 1942, freedom had a fixed definition. The year before, President Franklin Roosevelt had provided that definition when he described “four essential human freedoms”: freedom of speech, freedom of worship, freedom from want, and freedom from fear. That was it. Freedom did not include equality or individual empowerment or radical autonomy.

As Army chief of staff, Marshall had focused on winning the war, not upending the social and cultural status quo (hence his acceptance of a Jim Crow army). The immediate objective was to defeat Nazi Germany and Japan, not to subvert the white patriarchy, endorse sexual revolutions, or promote diversity.

Further complicating this ever-expanding freedom agenda is another factor just now beginning to intrude into American politics: whether it is possible to preserve the habits of consumption, hypermobility, and self-indulgence that most Americans see as essential to daily existence while simultaneously tackling the threat posed by human-induced climate change. For Americans, freedom always carries with it expectations of more. It did in 1942, and it still does today. Whether more can be reconciled with the preservation of the planet is a looming question with immense implications.

When Marshall headed the U.S. Army, he was oblivious to such concerns in ways that his latter-day successors atop the U.S. military hierarchy cannot afford to be. National security and the well-being of the planet have become inextricably intertwined. In 2010, Admiral Michael Mullen, chairman of the Joint Chiefs of Staff, declared that the national debt, the prime expression of American profligacy, had become “the most significant threat to our national security.” In 2017, General Paul Selva, Joint Chiefs vice chair, stated bluntly that “the dynamics that are happening in our climate will drive uncertainty and will drive conflict.”

As for translating objectives into outcomes, Marshall’s “great citizen-army” is long gone, probably for good. The tradition of the citizen-soldier that Marshall considered the foundation of the American military collapsed as a consequence of the Vietnam War. Today the Pentagon relies instead on a relatively small number of overworked regulars reinforced by paid mercenaries, aka contractors. The so-called all-volunteer force (AVF) is volunteer only in the sense that the National Football League is. Terminate the bonuses that the Pentagon offers to induce high school graduates to enlist and serving soldiers to re-up, and the AVF would vanish.

Furthermore, the tasks assigned to these soldiers go well beyond simply forcing our adversaries to submit, which was what we asked of soldiers in World War II. Since 9/11, those tasks include something akin to conversion: bringing our adversaries to embrace our own conception of what freedom entails, endorse liberal democracy, and respect women’s rights. Yet to judge by recent wars in Iraq (originally styled Operation Iraqi Freedom) and Afghanistan (for years called Operation Enduring Freedom), U.S. forces are not equipped to accomplish such demanding work.

Donald Trump at a rally in Lake Charles, Louisiana, 2019 © Saul Loeb/AFP/Getty Images

This record of non-success testifies to the bind in which the United States finds itself. Saddled with outsized ambitions dating from the end of the Cold War, confronted by dramatic and unanticipated challenges, stuck with instruments of power ill-suited to existing and emerging requirements, and led by a foreign-policy establishment that suffers from terminal inertia, the United States has lost its strategic bearings.

Deep in denial, that establishment nonetheless has a ready-made explanation for what’s gone wrong: as in the years from 1939 to 1941, so too today a putative penchant for isolationism is crippling U.S. policy. Isolationists are ostensibly preventing the United States from getting on with the business of amassing power to spread freedom, as specified in Marshall’s doctrine. Consider, if you will, the following headlines dating from before Trump took office: “Isolationism Soars Among Americans” (2009); “American isolationism just hit a fifty-year high” (2013); “America’s New Isolationism” (2013, twice); “Our New Isolationism” (2013); “The New American Isolationism” (2014); “American Isolationism Is Destabilizing the World” (2014); “The Revival of American Isolationism” (2016). And let us not overlook “America’s New Isolationists Are Endangering the West,” penned in 2013 by none other than John Bolton, Trump’s recently cashiered national security adviser.

Note that when these essays appeared, U.S. military forces were deployed in well over one hundred countries around the world and were actively engaged in multiple foreign wars. The Pentagon’s budget easily dwarfed that of any plausible combination of rivals. If this fits your definition of isolationism, then you might well believe that President Trump is, as he claims, “the master of the deal.” All the evidence proves otherwise.

Isolationism is a fiction, bandied about to divert attention from other issues. It is a scare word, an egregious form of establishment-sanctioned fake news. It serves as a type of straitjacket, constraining debate on possible alternatives to militarized American globalism, which has long since become a source of self-inflicted wounds.

Only when foreign-policy elites cease to cite isolationism to explain why the “sole superpower” has stumbled of late will they be able to confront the issues that matter. Ranking high among those issues are an egregious misuse of American military power and an equally egregious abuse of American soldiers. Confronting the vast disparity between U.S. military ambitions since 9/11 and the results actually achieved is a necessary first step toward devising a serious response to Donald Trump’s reckless assault on even the possibility of principled statecraft.

Marshall’s 1942 formula has become an impediment to sound policy. My guess is that, faced with the facts at hand, the general would have been the first to agree. He was known to tell subordinates, “Don’t fight the problem, decide it.” Yet before deciding, it’s necessary to see the problem for what it is and, in this instance, perhaps also to see ourselves as we actually are.

For the United States today, the problem turns out to be similar to the one that beset the nation during the period leading up to World War II: not isolationism but overstretch, compounded by indolence. The present-day disparities between our aspirations, commitments, and capacities to act are enormous.

The core questions, submerged today as they were on the eve of U.S. entry into World War II, are these: What does freedom require? How much will it cost? And who will pay?