Republicans and Democrats disagree today on many issues, but they are united in their resolve that the United States must remain the world’s greatest military power. This bipartisan commitment to maintaining American supremacy has become a political signature of our times. In its most benign form, the consensus finds expression in extravagant and unremitting displays of affection for those who wear the uniform. Considerably less benign is a pronounced enthusiasm for putting our soldiers to work “keeping America safe.” This tendency finds the United States more or less permanently engaged in hostilities abroad, even as presidents from both parties take turns reiterating the nation’s enduring commitment to peace.

To be sure, this penchant for military activism attracts its share of critics. Yet dissent does not imply influence. The trivializing din of what passes for news drowns out the antiwar critique. One consequence of remaining perpetually at war is that the political landscape in America does not include a peace party. Nor, during presidential-election cycles, does that landscape accommodate a peace candidate of consequence. The campaign now in progress has proved no exception. Candidates calculate that tough talk wins votes. They are no more likely to question the fundamentals of U.S. military policy than to express skepticism about the existence of a deity. Principled opposition to war ranks as a disqualifying condition, akin to having once belonged to the Communist Party or the KKK. The American political scene allows no room for the intellectual progeny of Jane Addams, Eugene V. Debs, Dorothy Day, or Martin Luther King Jr.

So, this November, voters will choose between rival species of hawks. Each of the finalists will insist that freedom’s survival hinges on having in the Oval Office a president ready and willing to employ force, even as each will dodge any substantive assessment of what acting on that impulse has produced of late. In this sense, the outcome of the general election has already been decided. As regards so-called national security, victory is ensured. The status quo will prevail, largely unexamined and almost entirely intact.

Citizens convinced that U.S. national-security policies are generally working well can therefore rest easy. Those not sharing that view, meanwhile, might wonder how it is that military policies that are manifestly defective — the ongoing accumulation of unwon wars providing but one measure — avoid serious scrutiny, with critics of those policies consigned to the political margins.

History provides at least a partial answer to this puzzle. The constructed image of the past to which most Americans habitually subscribe prevents them from seeing other possibilities, a condition for which historians themselves bear some responsibility. Far from encouraging Americans to think otherwise, these historians have effectively collaborated with those interests that are intent on suppressing any popular inclination toward critical reflection. This tunnel vision affirms certain propositions that are dear to American hearts, preeminently the conviction that history itself has summoned the United States to create a global order based on its own self-image. The resulting metanarrative unfolds as a drama in four acts: in the first, Americans respond to but then back away from history’s charge; in the second, they indulge in an interval of adolescent folly, with dire consequences; in the third, they reach maturity and shoulder their providentially assigned responsibilities; in the fourth, after briefly straying off course, they stage an extraordinary recovery. When the final curtain in this drama falls, somewhere around 1989, the United States is the last superpower standing.

For Americans, the events that established the twentieth century as their century occurred in the military realm: two misleadingly named “world wars” separated by an “interwar period” during which the United States ostensibly took a time-out, followed by a so-called Cold War that culminated in decisive victory despite being inexplicably marred by Vietnam. To believe in the lessons of this melodrama — which warn above all against the dangers of isolationism and appeasement — is to accept that the American Century should last in perpetuity. Among Washington insiders, this view enjoys a standing comparable to belief in the Second Coming among devout Christians.

Unfortunately, in the United States these lessons retain little relevance. Whatever the defects of current U.S. policy, isolationism and appeasement do not number among them. With its military active in more than 150 countries, the United States today finds itself, if anything, overextended. Our principal security challenges — the risks to the planet posed by climate change, the turmoil enveloping much of the Islamic world and now spilling into the West, China’s emergence as a potential rival to which Americans have mortgaged their prosperity — will not yield to any solution found in the standard Pentagon repertoire. Yet when it comes to conjuring up alternatives, the militarized history to which Americans look for instruction has little to offer.

Prospects for thinking otherwise require an altogether different historical frame. Shuffling the deck — reimagining our military past — just might produce lessons that speak more directly to our present predicament.

Consider an alternative take on the twentieth-century U.S. military experience, with a post-9/11 codicil included for good measure. Like the established narrative, this one also consists of four episodes: a Hundred Years’ War for the Hemisphere, launched in 1898; a War for Pacific Dominion, also initiated in 1898, petering out in the 1970s but today showing signs of reviving; a War for the West, already under way when the United States entered it in 1917 and destined to continue for seven more decades; and a War for the Greater Middle East, dating from 1980 and ongoing still with no end in sight.

In contrast to the more familiar four-part narrative, these several military endeavors bear no more than an incidental relationship to one another. Even so, they resemble one another in this important sense: each found expression as an expansive yet geographically specific military enterprise destined to extend across several decades. Each involved the use (or threatened use) of violence against an identifiable adversary or set of adversaries.

Yet for historians inclined to think otherwise, the analytically pertinent question is not against whom U.S. forces fought but why. It’s what the United States was seeking to accomplish that matters most. Here, briefly, is a revised account of the wars defining the (extended) American Century, placing purpose or motive at the forefront.

In February 1898, the battleship U.S.S. Maine, at anchor in Havana Harbor, blew up and sank, killing 266 American sailors. Widely viewed at the time as an act of state-sponsored terrorism, this incident initiated what soon became a War for the Hemisphere.

Two months later, vowing to deliver Cubans from oppressive colonial rule, the United States Congress declared war on Spain. Within weeks, however, the enterprise evolved into something quite different. After ousting Cuba’s Spanish overseers, the United States disregarded the claims of nationalists calling for independence, subjected the island to several years of military rule, and then converted it into a protectorate that was allowed limited autonomy. Under the banner of anti-imperialism, a project aimed at creating an informal empire had commenced.

America’s intervention in Cuba triggered a bout of unprecedented expansionism. By the end of 1898, U.S. forces had also seized Puerto Rico, along with various properties in the Pacific. These actions lacked a coherent rationale until Theodore Roosevelt, elevated to the presidency in 1901, took it on himself to fill that void. An American-instigated faux revolution that culminated with a newly founded Republic of Panama signing over to the United States its patrimony — the route for a transisthmian canal — clarified the hierarchy of U.S. interests. Much as concern about Persian Gulf oil later induced the United States to assume responsibility for policing that region, so concern for securing the as yet unopened canal induced it to police the Caribbean.

In 1904, Roosevelt’s famous “corollary” to the Monroe Doctrine, claiming for the United States authority to exercise “international police power” in the face of “flagrant . . . wrongdoing or impotence,” provided a template for further action. Soon thereafter, U.S. forces began to intervene at will throughout the Caribbean and Central America, typically under the guise of protecting American lives and property but in fact to position the United States as regional suzerain. Within a decade, Haiti, the Dominican Republic, and Nicaragua joined Cuba and Panama on the roster of American protectorates. Only in Mexico, too large to occupy and too much in the grip of revolutionary upheaval to tame, did U.S. military efforts to impose order come up short.

“Yankee imperialism” incurred costs, however, not least of all by undermining America’s preferred self-image as benevolent and peace-loving, and therefore unlike any other great power in history. To reduce those costs, beginning in the 1920s successive administrations sought to lower the American military profile in the Caribbean basin. The United States was now content to allow local elites to govern so long as they respected parameters established in Washington. Here was a workable formula for exercising indirect authority, one that prioritized order over democracy, social justice, and the rule of law.

By 1933, when Franklin Roosevelt inaugurated his Good Neighbor policy with the announcement that “the definite policy of the United States from now on is one opposed to armed intervention,” the War for the Hemisphere seemed largely won. Yet neighborliness did not mean that U.S. military forces were leaving the scene. As insurance against backsliding, Roosevelt left intact the U.S. bases in Cuba and Puerto Rico, and continued to garrison Panama.

So rather than ending, the Hundred Years’ War for the Hemisphere had merely gone on hiatus. In the 1950s, the conflict resumed and even intensified, with Washington now defining threats to its authority in ideological terms. Leftist radicals rather than feckless caudillos posed the problem. During President Dwight D. Eisenhower’s first term, a CIA-engineered coup in Guatemala tacitly revoked FDR’s nonintervention pledge and appeared to offer a novel way to enforce regional discipline without actually committing U.S. troops. Under President John F. Kennedy, the CIA tried again, in Cuba. That was just for starters.

Between 1964 and 1994, U.S. forces intervened in the Dominican Republic, Grenada, Panama, and Haiti, in most cases for the second or third time. Nicaragua and El Salvador also received sustained American attention. In the former, Washington employed methods that were indistinguishable from terrorism to undermine a regime it viewed as illegitimate. In the latter, it supported an ugly counterinsurgency campaign to prevent leftist guerrillas from overthrowing right-wing oligarchs. Only in the mid-1990s did the Hundred Years’ War for the Hemisphere once more begin to subside. With the United States having forfeited its claim to the Panama Canal and with U.S.–Cuban relations now normalized, it may have ended for good.

Today the United States enjoys unquestioned regional primacy, gained at a total cost of fewer than a thousand U.S. combat fatalities, even counting the luckless sailors who went down with the Maine. More difficult to say with certainty is whether a century of interventionism facilitated or complicated U.S. efforts to assert primacy in its “own back yard.” Was coercion necessary? Or might patience have produced a similar outcome? Still, in the end, Washington got what it wanted. Given the gaping imbalance of power between the Colossus of the North and its neighbors, we may wonder whether the final outcome was ever in doubt.

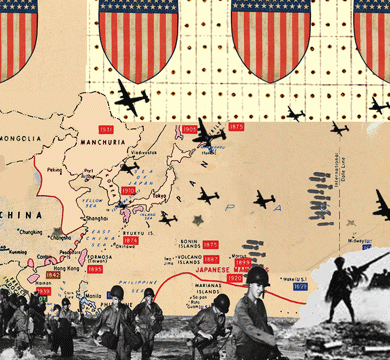

During its outward thrust of 1898, the United States seized the entire Philippine archipelago, along with smaller bits of territory such as Guam, Wake, and the Hawaiian Islands. By annexing the Philippines, U.S. authorities enlisted in a high-stakes competition to determine the fate of the Western Pacific, with all parties involved viewing China as the ultimate prize. Along with traditional heavyweights such as France, Great Britain, and Russia, the ranks of the competitors included two emerging powers. One was the United States, the other imperial Japan. Within two decades, thanks in large part to the preliminary round of the War for the West, the roster had thinned considerably, putting the two recent arrivals on the path for a showdown.

The War for Pacific Dominion confronted the U.S. military with important preliminary tasks. Obliging Filipinos to submit to a new set of colonial masters entailed years of bitter fighting. More American soldiers died pacifying the Philippines between 1899 and 1902 than were to lose their lives during the entire Hundred Years’ War for the Hemisphere. Yet even as U.S. forces were struggling in the Philippines, orders from Washington sent them venturing more deeply into Asia. In 1900, several thousand American troops deployed to China to join a broad coalition (including Japan) assembled to put down the so-called Boxer Rebellion. Although the expedition had a nominally humanitarian purpose — Boxers were murdering Chinese Christians while laying siege to legations in Peking’s diplomatic quarter — its real aim was to preserve the privileged status accorded foreigners in China. In that regard, it succeeded, thereby giving a victory to imperialism.

Through its participation in this brief campaign, the United States signaled its own interest in China. A pair of diplomatic communiqués known as the Open Door Notes codified Washington’s position by specifying two non-negotiable demands: first, to preserve China’s territorial integrity; and second, to guarantee equal opportunity for all the foreign powers engaged in exploiting that country. Both of these demands would eventually put the United States and Japan at cross-purposes. To substantiate its claims, the United States established a modest military presence in China. At Tientsin, two days’ march from Peking, the U.S. Army stationed an infantry regiment. The U.S. Navy ramped up its patrols on the Yangtze River between Shanghai and Chungking — more or less the equivalent of Chinese gunboats today traversing the Mississippi River between New Orleans and Minneapolis.

U.S. and Japanese interests in China proved to be irreconcilable. In hindsight, a violent collision between these two rising powers appears almost unavoidable. As wide as the Pacific might be, it was not wide enough to accommodate the ambitions of both countries. Although a set of arms-limiting treaties negotiated at the Washington Naval Conference of 1921–22 put a momentary brake on the rush toward war, that pause could not withstand the crisis of the Great Depression. Once Japanese forces invaded Manchuria in 1931 and established the puppet state of Manchukuo, the options available to the United States had reduced to two: either allow the Japanese a free hand in China or muster sufficient power to prevent them from having their way. By the 1930s, the War for Pacific Dominion had become a zero-sum game.

To recurring acts of Japanese aggression in China, Washington responded with condemnation and, eventually, punishing economic sanctions. What the United States did not do, however, was reinforce its Pacific outposts to the point where they could withstand serious assault. Indeed, the Navy and War departments all but conceded that the Philippines, impulsively absorbed back in the heady days of 1898, were essentially indefensible.

At odds with Washington over China, Japanese leaders concluded that the survival of their empire hinged on defeating the United States in a direct military confrontation. They could see no alternative to the sword. Nor, barring an unexpected Japanese capitulation to its demands, could the United States. So the December 7, 1941, attack on Pearl Harbor came as a surprise only in the narrow sense that U.S. commanders underestimated the prowess of Japan’s aviators.

That said, the ensuing conflict was from the outset a huge mismatch. Only in willingness to die for their country did the Japanese prove equal to the Americans. By every other measure — military-age population, raw materials, industrial capacity, access to technology — they trailed badly. Allies exacerbated the disparity, since Japan fought virtually alone. Once FDR persuaded his countrymen to go all out to win — after Pearl Harbor, not a difficult sell — the war’s eventual outcome was not in doubt. When the incineration of Hiroshima and Nagasaki ended the fighting, the issue of Pacific dominion appeared settled. Having brought their principal foe to its knees, the Americans were now in a position to reap the rewards.

In the event, things were to prove more complicated. Although the United States had thwarted Japan’s efforts to control China, developments within China itself soon dashed American expectations of enjoying an advantageous position there. The United States “lost” it to communist revolutionaries who ousted the regime that Washington had supported against the Japanese. In an instant, China went from ally to antagonist.

So U.S. forces remained in Japan, first as occupiers and then as guarantors of Japanese security (and as a check on any Japanese temptation to rearm). That possible threats to Japan were more than theoretical became evident in the summer of 1950, when war erupted on the nearby Korean peninsula. A mere five years after the War for Pacific Dominion had seemingly ended, G.I.’s embarked on a new round of fighting.

The experience proved an unhappy one. Egregious errors of judgment by the Americans drew China into the hostilities, making the war longer and more costly than it might otherwise have been. When the end finally came, it did so in the form of a painfully unsatisfactory draw. Yet with the defense of South Korea now added to Washington’s list of obligations, U.S. forces stayed on there as well.

In the eyes of U.S. policymakers, Red China now stood as America’s principal antagonist in the Asia–Pacific region. Viewing the region through red-tinted glasses, Washington saw communism everywhere on the march. So in American eyes a doomed campaign by France to retain its colonies in Indochina became part of a much larger crusade against communism on behalf of freedom. When France pulled the plug in Vietnam, in 1954, the United States effectively stepped into its role. An effort extending across several administrations to erect in Southeast Asia a bulwark of anticommunism aligned with the United States exacted a terrible toll on all parties involved and produced only one thing of value: machinations undertaken by President Richard Nixon to extricate the United States from a mess of its own making persuaded him to reclassify China not as an ideological antagonist but as a geopolitical collaborator.

As a consequence, the rationale for waging war in Vietnam in the first place — resisting the onslaught of the Red hordes — also faded. With it, so too did any further impetus for U.S. military action in the region. The War for Pacific Dominion quieted down appreciably, though it didn’t quite end. With China now pouring its energies into internal development, Americans found plentiful opportunities to invest and indulge their insatiable appetite for consumption. True, a possible renewal of fighting in Korea remained a perpetual concern. But when your biggest worry is a small, impoverished nation-state that is unable even to feed itself, you’re doing pretty well.

As far as the Pacific is concerned, Americans may end up viewing the last two decades of the twentieth century and the first decade of the twenty-first as a sort of golden interlude. The end of that period may now be approaching. Uncertainty about China’s intentions as a bona fide superpower is spooking other nearby nations, not least of all Japan. That another round of competition for the Pacific now looms qualifies at the very least as a real possibility.

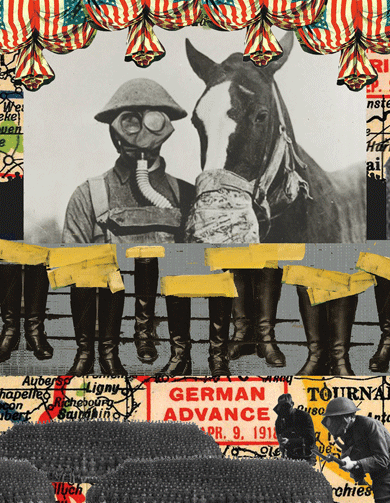

For the United States, the War for the West began in 1917, when President Woodrow Wilson persuaded Congress to enter a stalemated European conflict that had been under way since 1914. The proximate cause of the U.S. decision to intervene was the resumption of German U-boat attacks on American shipping. To that point, U.S. policy had been one of formal neutrality, a posture that had not prevented the United States from providing Germany’s enemies, principally Great Britain and France, with substantial assistance, both material and financial. The Germans had reason to be miffed.

For the war’s European participants, the issue at hand was as stark as it was straightforward. Through force of arms, Germany was bidding for continental primacy; through force of arms, Great Britain, France, and Russia were intent on thwarting that bid. To the extent that ideals figured among the stated war aims, they served as mere window dressing. Calculations related to Machtpolitik overrode all other considerations.

President Wilson purported to believe that America’s entry into the war, ensuring Germany’s defeat, would vanquish war itself, with the world made safe for democracy — an argument that he advanced with greater passion and eloquence than logic. Here was the cause for which Americans sent their young men to fight in Europe: the New World was going to redeem the Old.

It didn’t work out that way. The doughboys made it to the fight, but belatedly. Even with 116,000 dead, their contribution to the final outcome fell short of being decisive. When the Germans eventually quit, they appealed for a Wilsonian “peace without victory.” The Allies had other ideas. Their conception of peace was to render Germany too weak to pose any further danger. Meanwhile, Great Britain and France wasted little time claiming the spoils, most notably by carving up the Ottoman Empire and thereby laying the groundwork for what would eventually become the War for the Greater Middle East.

When Wilson’s grandiose expectations of a world transformed came to naught, Americans concluded — not without cause — that throwing in with the Allies had been a huge mistake. What observers today mischaracterize as “isolationism” was a conviction, firmly held by many Americans during the 1920s and 1930s, that the United States should never again repeat that mistake.

According to myth, that conviction itself produced an even more terrible conflagration, the European conflict of 1939–45, which occurred (at least in part) because Americans had second thoughts about their participation in the war of 1914–18 and thereby shirked their duty to intervene. Yet this is the equivalent of blaming a drunken brawl between rival street gangs on members of Alcoholics Anonymous meeting in a nearby church basement.

Although the second European war of the twentieth century differed from its predecessor in many ways, it remained at root a contest to decide the balance of power. Once again, Germany, now governed by nihilistic criminals, was making a bid for primacy. This time around, the Allies had a weaker hand, and during the war’s opening stages they played it poorly. Fortunately, Adolf Hitler came to their rescue by committing two unforced errors. Even though Joseph Stalin was earnestly seeking to avoid a military confrontation with Germany, Hitler removed that option by invading the Soviet Union in June 1941. Franklin Roosevelt had by then come to view the elimination of the Nazi menace as a necessity, but only when Hitler obligingly declared war on the United States, days after Pearl Harbor, did the American public rally behind that proposition.

In terms of the war’s actual conduct, only the United States was in a position to exercise any meaningful choice, whereas Great Britain and the Soviet Union responded to the dictates of circumstance. Exercising that choice, the Americans left the Red Army to bear the burden of fighting. In a decision that qualifies as shrewd or perfidious depending on your point of view, the United States waited until the German army was already on the ropes in the east before opening up a real second front.

The upshot was that the Americans (with Anglo-Canadian and French assistance) liberated the western half of Europe while conceding the eastern half to Soviet control. In effect, the prerogative of determining Europe’s fate thereby passed into non-European hands. Although out of courtesy U.S. officials continued to indulge the pretense that London and Paris remained centers of global power, this was no longer actually the case. By 1945 the decisions that mattered were made in Washington and Moscow.

So rather than ending with Germany’s second defeat, the War for the West simply entered a new phase. Within months, the Grand Alliance collapsed and the prospect of renewed hostilities loomed, with the United States and the Soviet Union each determined to exclude the other from Europe. During the decades-long armed standoff that ensued, both sides engaged in bluff and bluster, accumulated vast arsenals that included tens of thousands of nuclear weapons, and mounted impressive displays of military might, all for the professed purpose of preventing a “cold” war from turning “hot.”

Germany remained a source of potential instability, because that divided country represented such a coveted (or feared) prize. Only after 1961 did a semblance of stability emerge, as the erection of the Berlin Wall reduced the urgency of the crisis by emphasizing that it was not going to end anytime soon. All parties concerned concluded that a Germany split in two was something they could live with.

By the 1960s, armed conflict (other than through gross miscalculation) appeared increasingly improbable. Each side devoted itself to consolidating its holdings while attempting to undermine the other side’s hold on its allies, puppets, satellites, and fraternal partners. For national-security elites, managing this competition held the promise of a bountiful source of permanent employment. When Mikhail Gorbachev decided, in the late 1980s, to call the whole thing off, President Ronald Reagan numbered among the few people in Washington willing to take the offer seriously. Still, in 1989 the Soviet–American rivalry ended. So, too, if less remarked on, did the larger struggle dating from 1914 within which the so-called Cold War had formed the final chapter.

In what seemed, misleadingly, to be the defining event of the age, the United States had prevailed. The West was now ours.

Among the bequests that Europeans handed off to the United States as they wearied of exercising power, none can surpass the Greater Middle East in its problematic consequences. After the European war of 1939–45, the imperial overlords of the Islamic world, above all Great Britain, retreated. In a naïve act of monumental folly, the United States filled the vacuum left by their departure.

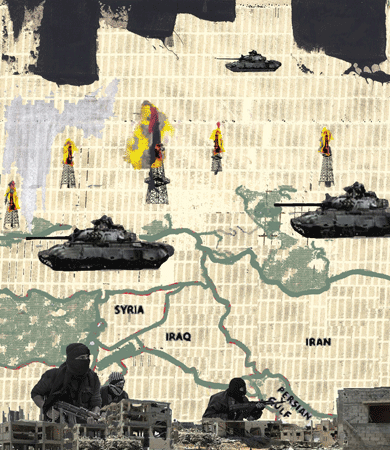

For Americans, the War for the Greater Middle East kicked off in 1980, when President Jimmy Carter designated the Persian Gulf a vital U.S. national-security interest. The Carter Doctrine, as the president’s declaration came to be known, initiated the militarizing of America’s Middle East policy, with next to no appreciation for what might follow.

During the successive “oil shocks” of the previous decade, Americans had made clear their unwillingness to tolerate any disruption to their oil-dependent lifestyle, and, in an immediate sense, the purpose of the War for the Greater Middle East was to prevent the recurrence of such disagreeable events. Yet in its actual implementation, the ensuing military project became much more than simply a war for oil.

In the decades since Carter promulgated his eponymous doctrine, the list of countries in the Islamic world that U.S. forces have invaded, occupied, garrisoned, bombed, or raided, or where American soldiers have killed or been killed, has grown very long indeed. Since 1980, that list has included Iraq and Afghanistan, of course, but also Iran, Lebanon, Libya, Turkey, Kuwait, Saudi Arabia, Qatar, Bahrain, the United Arab Emirates, Jordan, Bosnia, Kosovo, Yemen, Sudan, Somalia, Pakistan, and Syria. Of late, several West African nations with very large or predominantly Muslim populations have come in for attention. At times, U.S. objectives in the region have been specific and concrete. At other times, they have been broad and preposterously gauzy. Overall, however, Washington has found reasons aplenty to keep the troops busy. They arrived variously promising to keep the peace, punish evildoers, liberate the oppressed, shield the innocent, feed the starving, avert genocide or ethnic cleansing, spread democracy, and advance the cause of women’s rights. Rarely have the results met announced expectations.

In sharp contrast with the Hundred Years’ War for the Hemisphere, U.S. military efforts in the Greater Middle East have not contributed to regional stability. If anything, the reverse is true. Hopes of achieving primacy comparable to what the United States gained by 1945 in its War for Pacific Dominion remain unfulfilled and appear increasingly unrealistic. As for “winning,” in the sense that the United States ultimately prevailed in the War for the West, the absence of evident progress in the theaters that have received the most U.S. military attention gives little cause for optimism.

To be fair, U.S. troops have labored under handicaps. Among the most severe has been the absence of common agreement regarding the mission. Apart from the brief period of 2002–2006 when George W. Bush fancied that what ailed the Greater Middle East was the absence of liberal democracy (with his Freedom Agenda the needed antidote), policymakers have struggled to define the mission that American troops are expected to fulfill. The recurring inclination to define the core issue as “terrorism,” with expectations that killing “terrorists” in sufficient numbers should put things right, exemplifies this difficulty. Reliance on such generic terms amounts to a de facto admission of ignorance.

When contemplating the world beyond their own borders, many Americans — especially those in the midst of campaigning for high office — reflexively adhere to a dichotomous teleology of good versus evil and us versus them. The very “otherness” of the Greater Middle East itself qualifies the region in the eyes of most Americans as historically and culturally alien. U.S. military policy there has been inconsistent, episodic, and almost entirely reactive, with Washington cobbling together a response to whatever happens to be the crisis of the moment. Expediency and opportunism have seldom translated into effectiveness.

Consider America’s involvement in four successive Gulf Wars over the past thirty-five years. In Gulf War I, which began in 1980, when Iraq invaded Iran, and lasted until 1988, the United States provided both covert and overt support to Saddam Hussein, even while secretly supplying arms to Iran. In Gulf War II, which began in 1990, when Iraq invaded Kuwait, the United States turned on Saddam. Although the campaign to oust his forces from Kuwait ended in apparent victory, Washington decided to keep U.S. troops in the region to “contain” Iraq. Without attracting serious public attention, Gulf War II thereby continued through the 1990s. In Gulf War III, the events of 9/11 having rendered Saddam’s continued survival intolerable, the United States in 2003 finished him off and set about creating a new political order more to Washington’s liking. U.S. forces then spent years vainly trying to curb the anarchy created by the invasion and subsequent occupation of Iraq.

Unfortunately, the eventual withdrawal of U.S. troops at the end of 2011 marked little more than a brief pause. Within three years, Gulf War IV had commenced. To prop up a weak Iraqi state now besieged by a new enemy, one whose very existence was a direct result of previous U.S. intervention, the armed forces of the United States once more returned to the fight. Although the specifics varied, U.S. military actions since 1980 in Islamic countries as far afield as Afghanistan, Lebanon, Libya, and Somalia have produced similar results — at best they have been ambiguous, more commonly disastrous.

As for the current crop of presidential candidates vowing to “smash the would-be caliphate” (Hillary Clinton), “carpet bomb them into oblivion” (Ted Cruz), and “bomb the hell out of the oilfields” (Donald Trump), Americans would do well to view such promises with skepticism. If U.S. military power offers a solution to all that ails the Greater Middle East, then why hasn’t the problem long since been solved?

Lessons drawn from this alternative narrative of twentieth-century U.S. military history have no small relevance to the present day. Among other things, the narrative demonstrates that the bugaboos of isolationism and appeasement are pure inventions.

If isolationism defined U.S. foreign policy during the 1920s and 1930s, someone forgot to let the American officer corps in on the secret. In 1924, for example, Brigadier General Douglas MacArthur was commanding U.S. troops in the Philippines. Lieutenant Colonel George C. Marshall was serving in China as the commander of the 15th Infantry. Major George S. Patton was preparing to set sail for Hawaii and begin a stint as a staff officer at Schofield Barracks. Dwight D. Eisenhower’s assignment in the Pacific still lay in the future; in 1924, Major Eisenhower’s duty station was Panama. The indifference of the American people may have allowed that army to stagnate intellectually and materially. But those who served had by no means turned their backs on the world.

As for appeasement, hang that tag on Neville Chamberlain and Édouard Daladier, if you like. But as a description of U.S. military policy over the past century, it does not apply. Since 1898, apart from taking an occasional breather, the United States has shown a strong and consistent preference for activism over restraint and for projecting power abroad rather than husbanding it for self-defense. Only on rare occasions have American soldiers and sailors had reason to complain of being underemployed. So although the British may have acquired their empire “in a fit of absence of mind,” as apologists once claimed, the same cannot be said of Americans in the twentieth century. Not only in the Western Hemisphere but also in the Pacific and Europe, the United States achieved preeminence because it sought preeminence.

In the Greater Middle East, the site of our most recent war, a similar quest for preeminence has foundered, and the time for acknowledging the improbability of its ever succeeding is now at hand. Such an admission just might enable Americans to see how much the global landscape has changed since the United States made its dramatic leap into the ranks of great powers more than a century ago, as well as to extract insights of greater relevance than hoary old warnings about isolationism and appeasement.

The first insight pertains to military hegemony, which turns out to be less than a panacea. In the Western Hemisphere, for example, the undoubted military supremacy enjoyed by the United States is today largely beside the point. The prospect of hostile outside powers intruding in the Americas, which U.S. policymakers once cited as a justification for armed intervention, has all but disappeared.

Yet when it comes to actually existing security concerns, conventional military power possesses limited utility. Whatever the merits of gunboat diplomacy as practiced by Teddy Roosevelt and Wilson or by Eisenhower and JFK, such methods won’t stem the flow of drugs, weapons, dirty money, and desperate migrants passing back and forth across porous borders. Even ordinary Americans have begun to notice that the existing paradigm for managing hemispheric relations isn’t working — hence the popular appeal of Donald Trump’s promise to “build a wall” that would remove a host of problems with a single stroke. However bizarre and impractical, Trump’s proposal implicitly acknowledges that with the Hundred Years’ War for the Hemisphere now a thing of the past, fresh thinking is in order. The management of hemispheric relations requires a new paradigm, in which security is defined chiefly in economic rather than in military terms and policing is assigned to the purview of police agencies rather than to conventional armed forces. In short, it requires the radical demilitarization of U.S. policy. In the Western Hemisphere, apart from protecting the United States itself from armed attack, the Pentagon needs to stand down.

The second insight is that before signing up to fight for something, we ought to make sure that something is worth fighting for. When the United States has disregarded this axiom, it has paid dearly. In this regard, the annexation of the Philippines, acquired in a fever of imperial enthusiasm at the very outset of the War for Pacific Dominion, was a blunder of the first order. When the fever broke, the United States found itself saddled with a distant overseas possession for which it had little use and which it could not properly defend. Americans may, if they wish, enshrine the ensuing saga of Bataan and Corregidor as glorious chapters in U.S. military history. But pointless sacrifice comes closer to the truth.

By committing itself to the survival of South Vietnam, the United States replicated the error of its Philippine commitment. The fate of the Vietnamese south of the 17th parallel did not constitute a vital interest of the United States. Yet once we entered the war, a reluctance to admit error convinced successive administrations that there was no choice but to press on. A debacle of epic proportions ensued.

Jingoists keen to insert the United States today into minor territorial disputes between China and its neighbors should take note. Leave it to the likes of John Bolton, a senior official during the George W. Bush Administration, to advocate “risky brinkmanship” as the way to put China in its place. Others will ask how much value the United States should assign to the question of what flag flies over tiny island chains such as the Paracels and Spratlys. The answer, measured in American blood, amounts to milliliters.

During the twentieth century, achieving even transitory dominion in the Pacific came at a very high price. In three big fights, the United States came away with one win, one draw, and one defeat. Seeing that one win as a template for the future would be a serious mistake. Few if any of the advantages that enabled the United States to defeat Japan seventy years ago will pertain to a potential confrontation with China today. So unless Washington is prepared to pay an even higher price to maintain Pacific dominion, it may be time to define U.S. objectives there in more modest terms.

A third insight encourages terminating obligations that have become redundant. Here the War for the West is particularly instructive. When that war abruptly ended in 1989, what had the United States won? As it turned out, less than met the eye. Although the war’s conclusion found Europe “whole and free,” as U.S. officials incessantly proclaimed, the epicenter of global politics had by then moved elsewhere. The prize for which the United States had paid so dearly had in the interim lost much of its value.

Americans drawn to the allure of European culture, food, and fashion have yet to figure this out. Hence the far greater attention given to the occasional terrorist attack in Paris than to comparably deadly and more frequent incidents in places such as Nigeria or Egypt or Pakistan. Yet events in those countries are likely to have as much bearing on the fate of the planet as anything occurring in the tenth or eleventh arrondissement, if not more.

Furthermore, “whole and free” has not translated into “reliable and effective.” Visions of a United States of Europe partnering with the United States of America to advance common interests and common values have proved illusory. The European Union actually resembles a loose confederation, with little of the cohesion that the word “union” implies. Especially in matters related to security, the E.U. combines ineptitude with irresolution, a point made abundantly clear during the Balkan crises of the 1990s and reiterated since.

Granted, Americans rightly prefer a pacified Europe to a totalitarian one. Yet rather than an asset, Europe today has become a net liability, with NATO having evolved into a mechanism for indulging European dependency. The Western alliance that was forged to deal with the old Soviet threat has survived and indeed expanded ever eastward, having increased from sixteen members in 1990 to twenty-eight today. As the alliance enlarges, however, it sheds capability. Allowing their own armies to waste away, Europeans count on the United States to pick up the slack. In effect, NATO provides European nations an excuse to dodge their most fundamental responsibility: self-defense.

Nearly a century after Americans hailed the kaiser’s abdication, more than seventy years after they celebrated Hitler’s suicide, and almost thirty years after they cheered the fall of the Berlin Wall, a thoroughly pacified Europe cannot muster the wherewithal to deal even with modest threats such as post-Soviet Russia. For the United States to indulge this European inclination to outsource its own security might make sense if Europe itself still mattered as much as it did when the War for the West began. But it does not. Indeed, having on three occasions over the course of eight decades helped prevent Europe from being dominated by a single hostile power, the United States has more than fulfilled its obligation to defend Western civilization. Europe’s problems need no longer be America’s.

Finally, there is this old lesson, evident in each of the four wars that make up our alternative narrative but acutely present in the ongoing War for the Greater Middle East. That is the danger of allowing moral self-delusion to compromise political judgment. Americans have a notable penchant for seeing U.S. troops as agents of all that is good and holy pitted against the forces of evil. On rare occasions, and even then only loosely, the depiction has fit. Far more frequently, this inclination has obscured both the moral implications of American actions and the political complexities underlying the conflict to which the United States has made itself a party.

Indulging the notion that we live in a black-and-white world inevitably produces military policies that are both misguided and morally dubious. In the Greater Middle East, the notion has done just that, exacting costs that continue to mount daily as the United States embroils itself more deeply in problems to which our military power cannot provide an antidote. Perseverance is not the answer; it’s the definition of insanity. Thinking otherwise would be a first step toward restoring sanity. Reconfiguring the past so as to better decipher its meaning offers a first step toward doing just that.